Multi-Output Physics-Informed Neural Networks for Forward and Inverse PDE Problems with Uncertainties

Hildebrand Department of Petroleum and Geosystems Engineering

Department of Aerospace Engineering and Engineering Mechanics

Oden Institute for Computational Engineering and Science

The University of Texas at Austin

August 18, 2023

Physics Informed Neural Networks

PINNs

Introduced in (Raissi, Perdikaris, and Karniadakis 2019) as a general method for solving partial differential equations.

Already received >5900 citations since posting on arXiv in 2018!

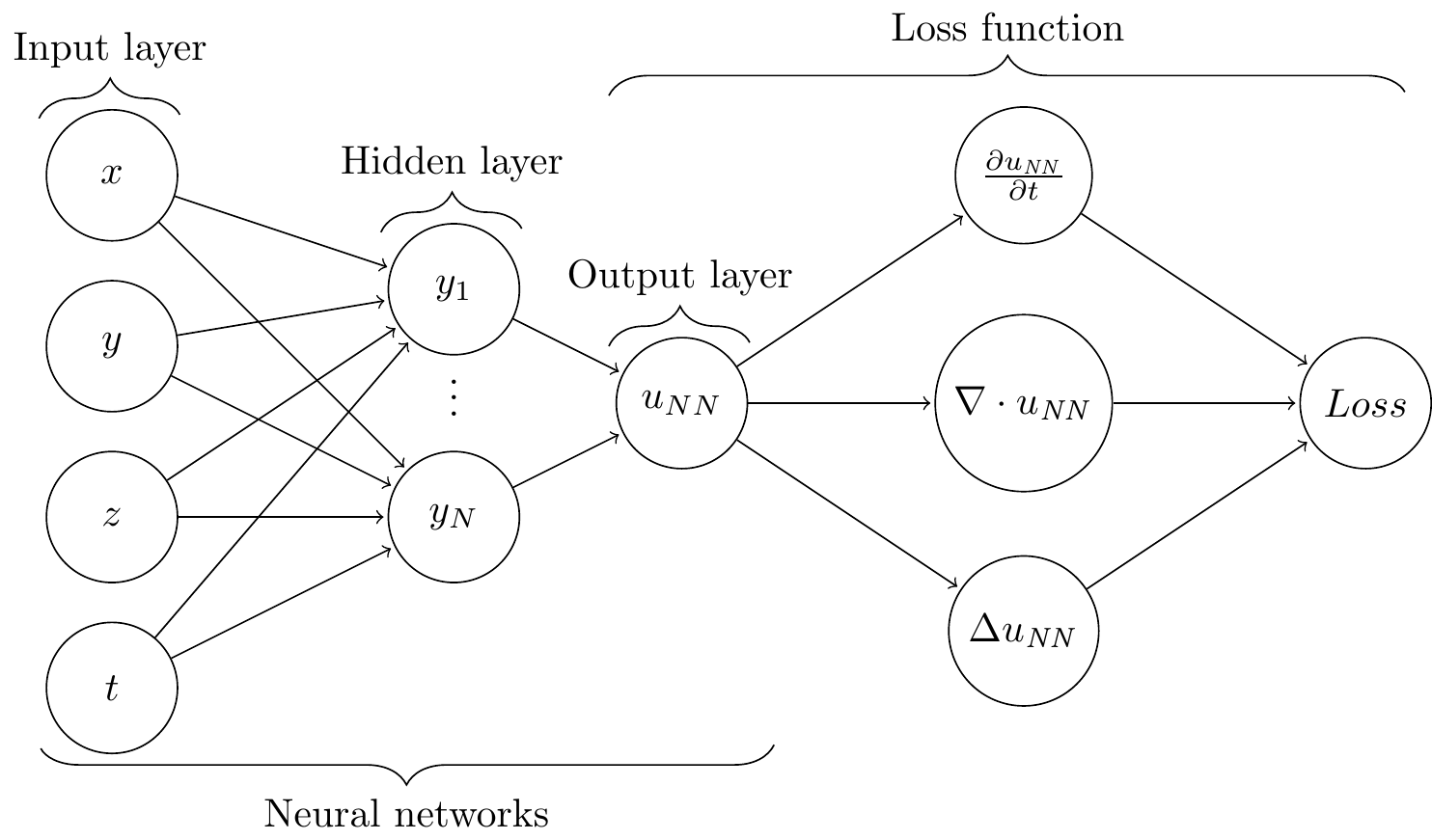

Generic PINN architecture

Loss function for generic PINN system

\[ \begin{align} r_d(u_{NN}, f_{NN}) &= \mathcal{L} u_{NN} - f_{NN},\qquad x \in \Omega \\ r_e(u_{NN}) &= u_{NN} - h,\qquad x \in \partial \Omega_h \\ r_n(u_{NN}) &= \frac{\partial u_{NN}}{\partial x} - g,\qquad x \in \partial \Omega_g \\ r_{u}(u_{NN}) &= u_{NN}(x^u_i) - u_m(x^u_i), \qquad i=1,2,...,n \\ r_{f}(f_{NN}) &= f_{NN}(x^f_i) - f_m(x^f_i), \qquad i=1,2,...,m \end{align} \]

\[ \begin{gather} L_{MSE} = \frac{1}{N}\sum_{i=1}^N r_d^2 + \frac{1}{N_e}\sum_{i=1}^{N_e} r_e^2 + \frac{1}{N_n}\sum_{i=1}^{N_n} r_n^2 + \frac{1}{n}\sum_{i=1}^{n} r_{u}^2 + \frac{1}{m}\sum_{i=1}^m r_{f}^2 \end{gather} \]
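As a rough illustration of how the five residual groups combine, the sketch below evaluates \(L_{MSE}\) from residual values that have already been computed. The names `mean_square` and `pinn_mse_loss` are hypothetical, and the residuals are assumed to be plain lists of floats; in a real PINN they would come from automatic differentiation of \(u_{NN}\) and \(f_{NN}\).

```python
from typing import Sequence


def mean_square(residuals: Sequence[float]) -> float:
    """Mean of squared residuals; an empty group contributes zero (an assumption)."""
    if len(residuals) == 0:
        return 0.0
    return sum(r * r for r in residuals) / len(residuals)


def pinn_mse_loss(r_d, r_e, r_n, r_u, r_f) -> float:
    """Sum of the five mean-squared residual groups, equally weighted as in L_MSE."""
    return sum(mean_square(group) for group in (r_d, r_e, r_n, r_u, r_f))


# Toy residual values, chosen only to exercise the function.
loss = pinn_mse_loss([1.0, -1.0], [2.0], [], [0.0], [3.0, 1.0])
```

Each group is normalized by its own point count, so a boundary set with few points is not drowned out by a large collocation set.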

Extensions of PINNs for UQ

- B-PINNs (L. Yang, Meng, and Karniadakis 2021)

- E-PINN (Jiang et al. 2022)

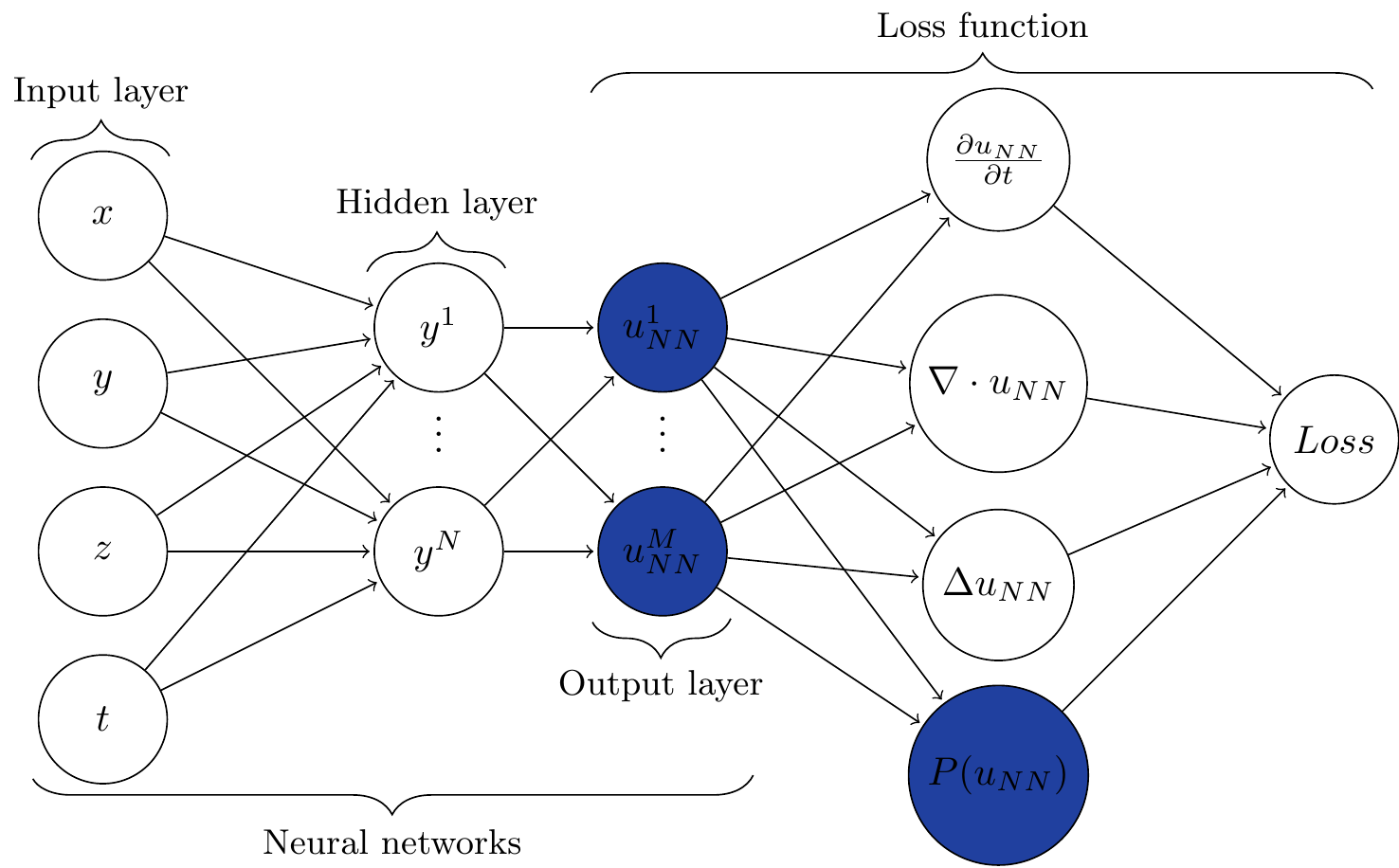

Multi-Output PINN

MO-PINN (M. Yang and Foster 2022)

Loss function for generic MO-PINN system

\[ \begin{align} r_d(u_{NN}^j, f_{NN}^j) &= \mathcal{L} u_{NN}^j - f_{NN}^j,\qquad x \in \Omega \\ r_e(u_{NN}^j) &= u_{NN}^j - h,\qquad x \in \partial \Omega_h \\ r_n(u_{NN}^j) &= \frac{\partial u_{NN}^j}{\partial x} - g,\qquad x \in \partial \Omega_g \\ r_{um}(u_{NN}^j) &= u_{NN}^j(x^u_i) - \left(u_m(x^u_i) + \sigma_u^j\right), \qquad i=1,2,...,n \\ r_{fm}(f_{NN}^j) &= f_{NN}^j(x^f_i) - \left(f_m(x^f_i) + \sigma_f^j\right), \qquad i=1,2,...,m \end{align} \]

\[ \begin{gather} L_{MSE} = \frac{1}{M}\sum_{j=1}^{M} \left( \frac{1}{N}\sum_{i=1}^N r_d^2 + \frac{1}{N_e}\sum_{i=1}^{N_e} r_e^2 + \frac{1}{N_n}\sum_{i=1}^{N_n} r_n^2 + \frac{1}{n}\sum_{i=1}^{n} r_{um}^2 + \frac{1}{m}\sum_{i=1}^m r_{fm}^2 \right) \end{gather} \]
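The measurement residuals \(r_{um}\) and \(r_{fm}\) differ from the generic PINN only in their targets: output \(j\) is fit to the measurements shifted by \(\sigma^j\). A minimal sketch of that data term follows. It assumes the shifts are zero-mean Gaussian draws made independently for each output and each measurement point, which the slide does not state. The names `perturbed_targets` and `mo_data_loss` are hypothetical.

```python
import random
from typing import List, Sequence


def perturbed_targets(measured: Sequence[float], sigma: float,
                      n_outputs: int, rng: random.Random) -> List[List[float]]:
    """Return targets[j][i] = measured[i] + noise, one row per network output j.

    Assumption: noise ~ N(0, sigma^2), drawn independently per output and point.
    """
    return [[m + rng.gauss(0.0, sigma) for m in measured]
            for _ in range(n_outputs)]


def mo_data_loss(predictions: Sequence[Sequence[float]],
                 targets: Sequence[Sequence[float]]) -> float:
    """Average over outputs j of the mean-squared measurement residual."""
    per_output = [
        sum((p - t) ** 2 for p, t in zip(pred_j, targ_j)) / len(targ_j)
        for pred_j, targ_j in zip(predictions, targets)
    ]
    return sum(per_output) / len(per_output)


rng = random.Random(0)
u_m = [0.0, 0.5, 1.0]  # toy measurements, not data from the talk
targets = perturbed_targets(u_m, sigma=0.1, n_outputs=4, rng=rng)
```

Because every output sees a different realization of the noisy data, the spread across outputs after training can be read as an empirical estimate of the uncertainty.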

Forward PDE problems

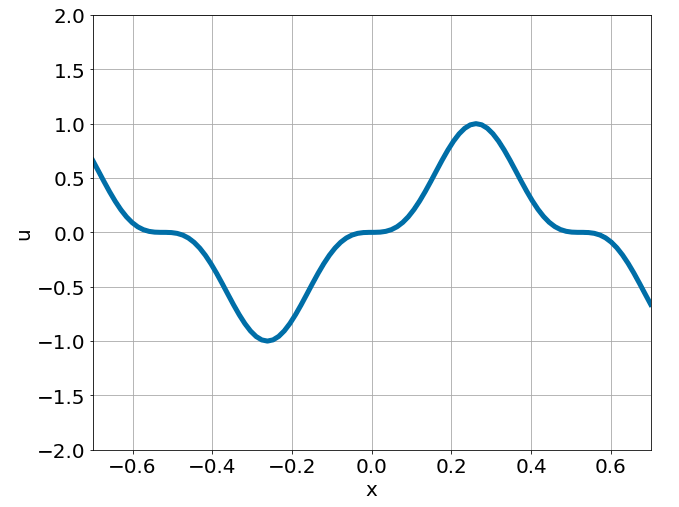

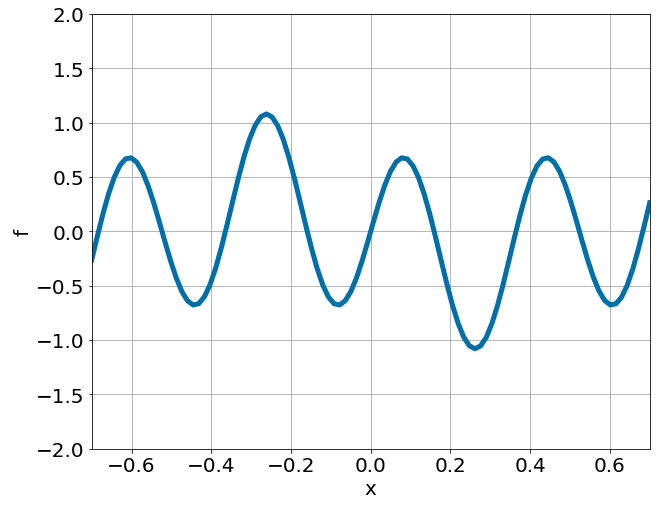

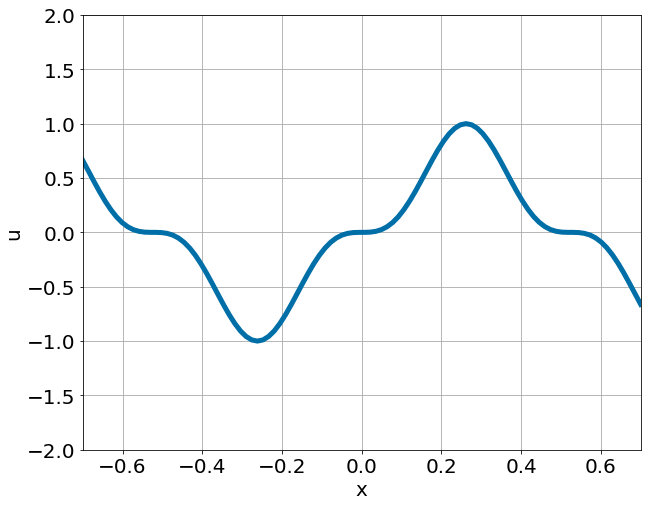

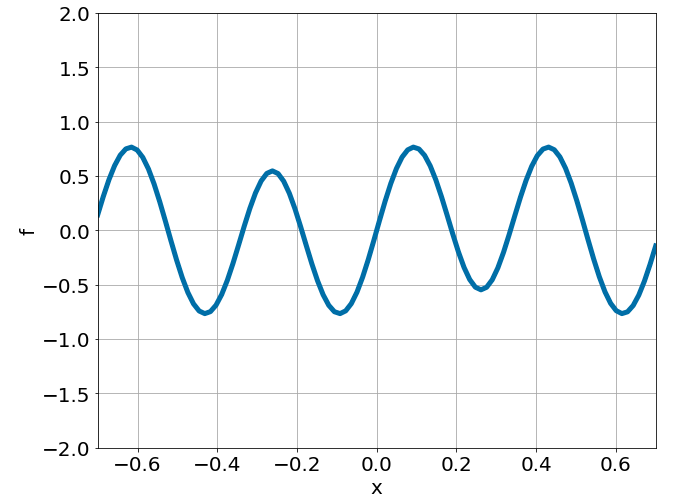

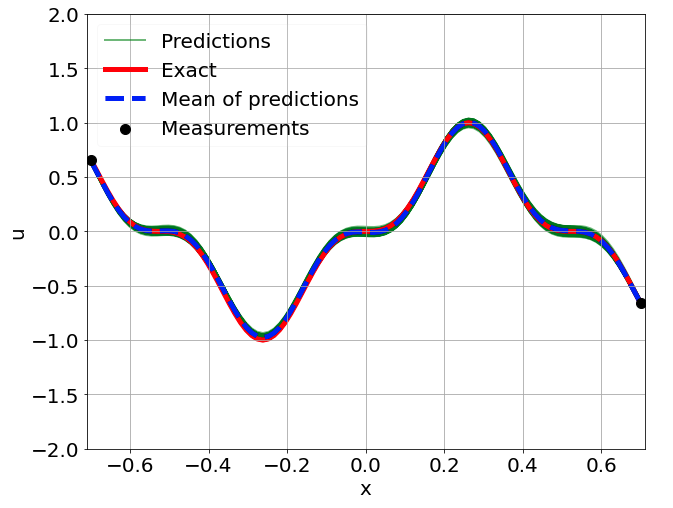

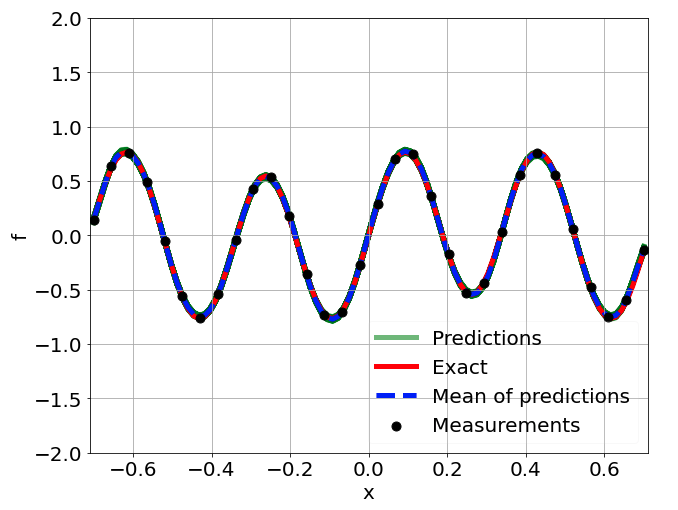

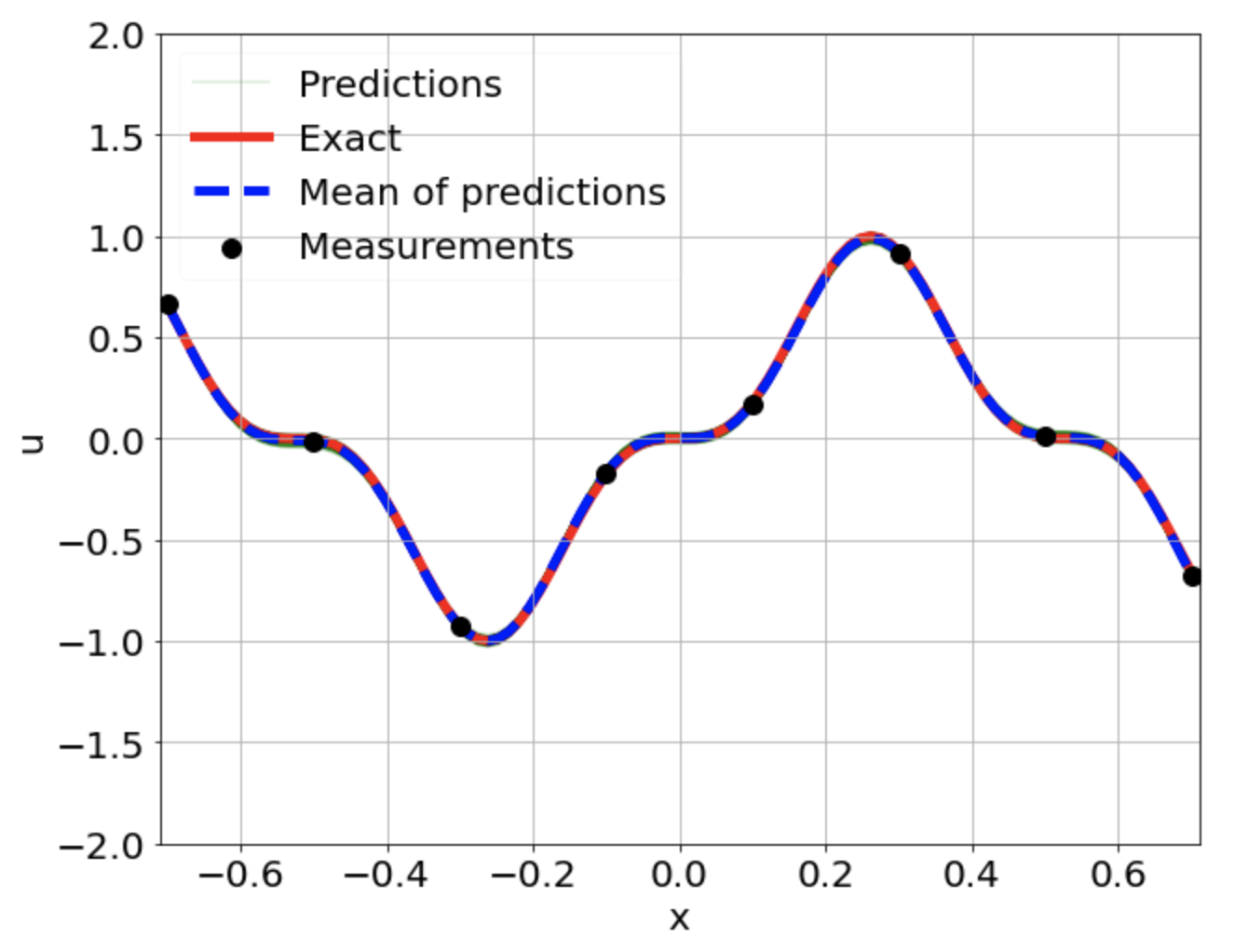

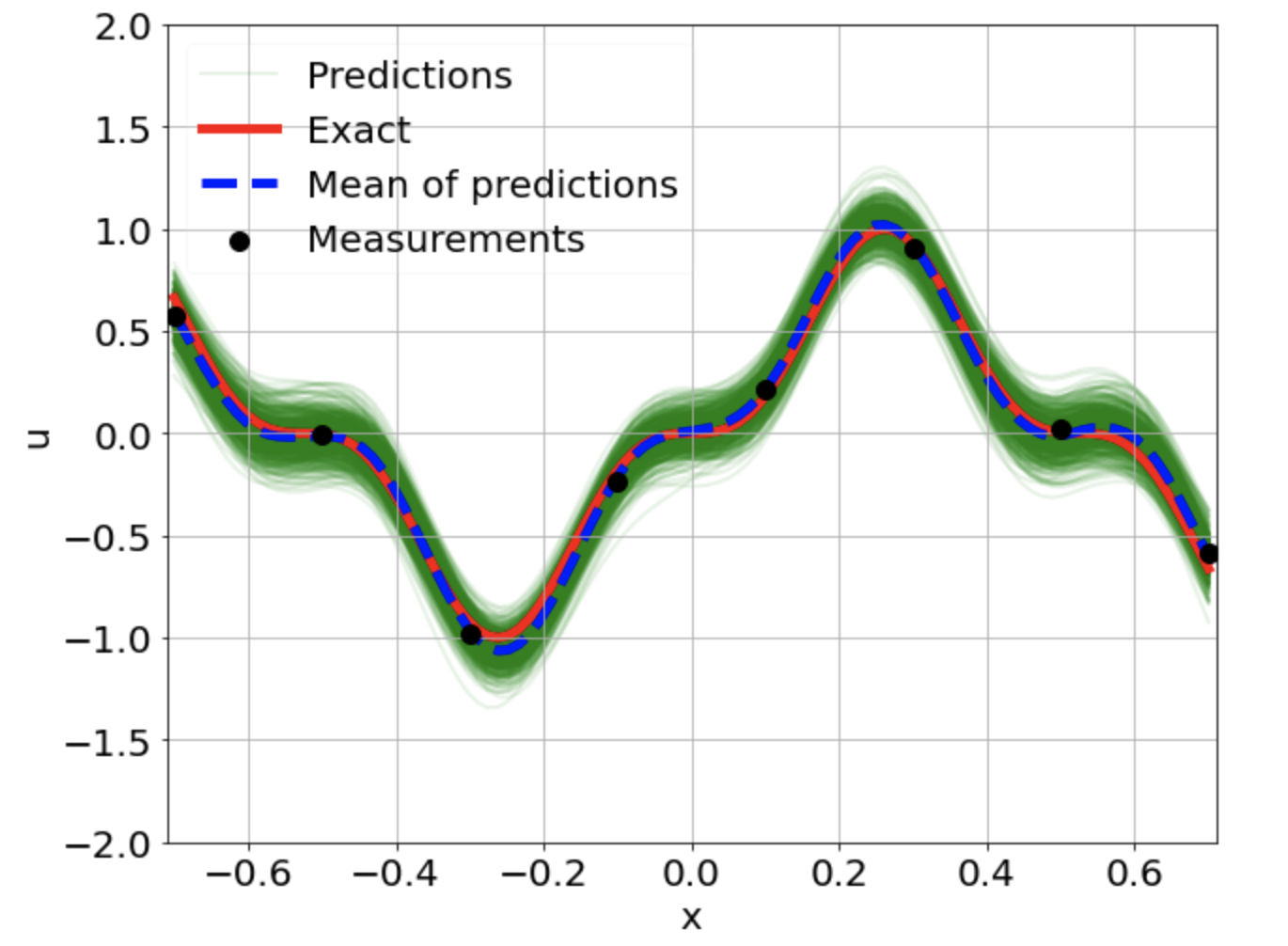

One-dimensional linear Poisson equation

\[ \begin{gathered} \lambda \frac{\partial^2 u}{\partial x^2} = f, \qquad x \in [-0.7, 0.7] \end{gathered} \] where \(\lambda = 0.01\) and \(u=\sin^3(6x)\)
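Since \(u\) is prescribed, the matching source term follows by differentiating twice: \(u'' = 216\sin(6x)\cos^2(6x) - 108\sin^3(6x)\). The sketch below evaluates \(f = \lambda u''\) and cross-checks it with a central difference. The function names are illustrative, not taken from the talk.

```python
import math

LAM = 0.01  # lambda from the slide


def u_exact(x: float) -> float:
    """Manufactured solution u = sin^3(6x)."""
    return math.sin(6.0 * x) ** 3


def f_exact(x: float) -> float:
    """f = lambda * u'' with u'' = 216 sin(6x) cos^2(6x) - 108 sin^3(6x)."""
    s, c = math.sin(6.0 * x), math.cos(6.0 * x)
    return LAM * (216.0 * s * c * c - 108.0 * s ** 3)


def f_finite_difference(x: float, h: float = 1e-4) -> float:
    """Central-difference approximation of lambda * u'' used as a sanity check."""
    return LAM * (u_exact(x + h) - 2.0 * u_exact(x) + u_exact(x - h)) / (h * h)
```

Measurements of \(u\) and \(f\) for training could then be sampled from `u_exact` and `f_exact` on \([-0.7, 0.7]\) before adding noise.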

Network architecture and hyperparameters

2 neural networks: \(u_{NN}\) and \(f_{NN}\)

2 hidden layers with 20 and 40 neurons, respectively

\(\tanh\) activation function

ADAM optimizer

\(10^{-3}\) learning rate

Xavier normalization

10000 epochs

500 outputs
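To make the listed settings concrete, here is a dependency-free sketch of one such network's forward pass. It rests on several assumptions not stated on the slide: the two hidden layers have widths 20 and 40, the 500 outputs form a linear final layer, biases start at zero, and "Xavier" means the Glorot uniform variant. The helper names are hypothetical, and actual training would use an automatic-differentiation framework with the ADAM optimizer rather than this pure-Python version.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])


def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """Glorot/Xavier uniform: U(-a, a) with a = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)]
            for _ in range(fan_out)]


def init_mlp(sizes: Sequence[int], rng: random.Random) -> List[Layer]:
    """One (weights, biases) pair per layer; biases start at zero."""
    return [(xavier_uniform(n_in, n_out, rng), [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(params: Sequence[Layer], x: float) -> List[float]:
    """tanh on hidden layers, linear final layer with one value per output."""
    a = [x]
    for idx, (weights, biases) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, a)) + b
             for row, b in zip(weights, biases)]
        a = z if idx == len(params) - 1 else [math.tanh(v) for v in z]
    return a


rng = random.Random(0)
u_net = init_mlp([1, 20, 40, 500], rng)  # 1 input (x), 500 outputs
u_values = forward(u_net, 0.1)           # 500 candidate values of u at x = 0.1
```

Under these assumptions almost all parameters sit in the final 40-by-500 layer, which is why adding outputs is cheap compared with training 500 separate networks.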

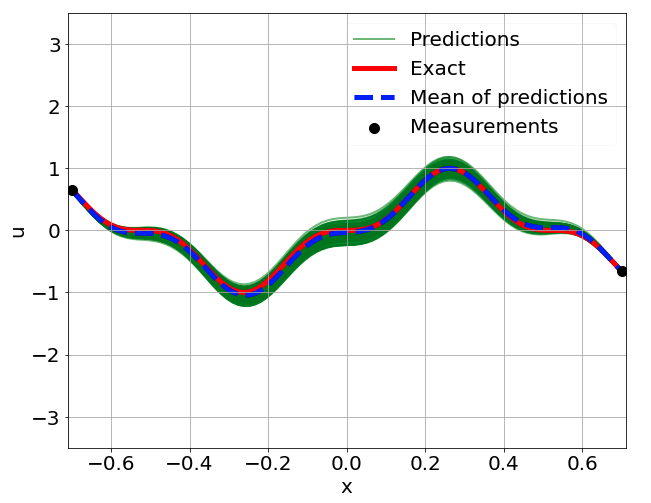

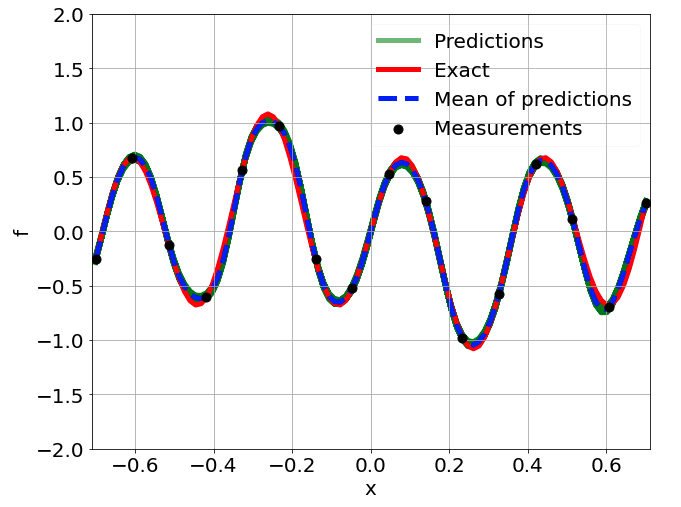

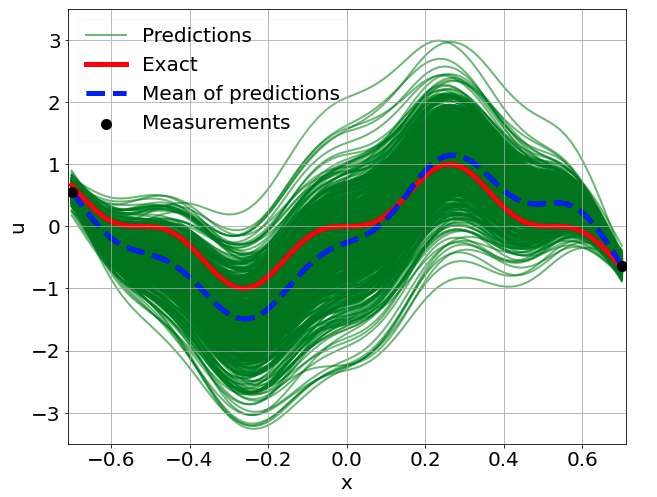

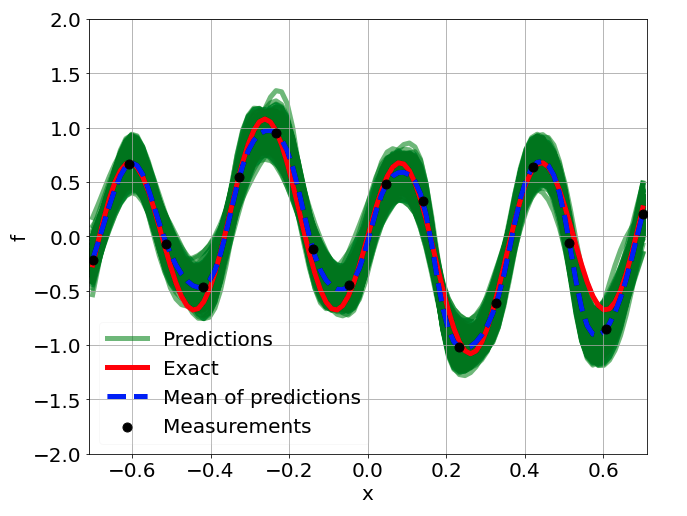

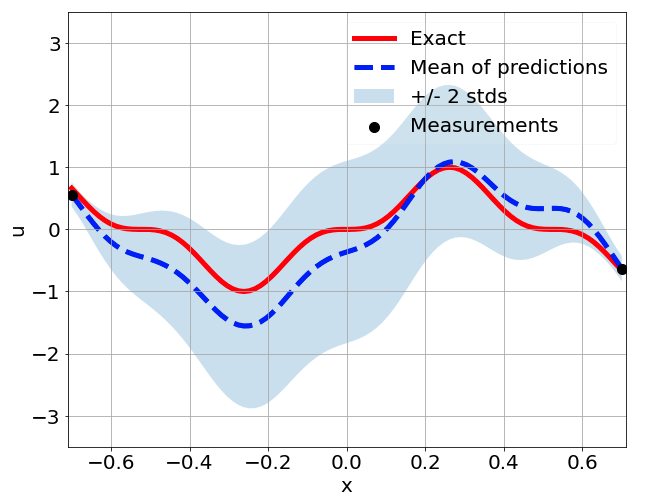

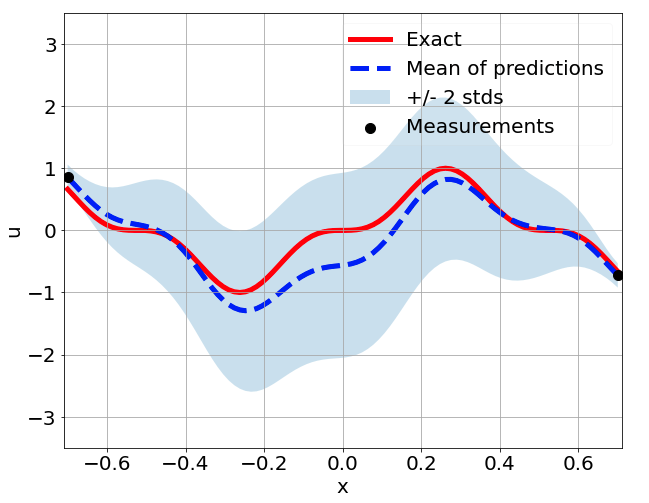

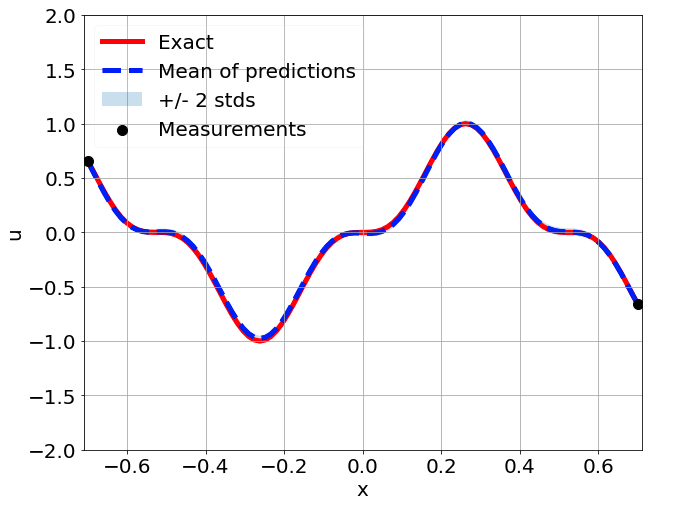

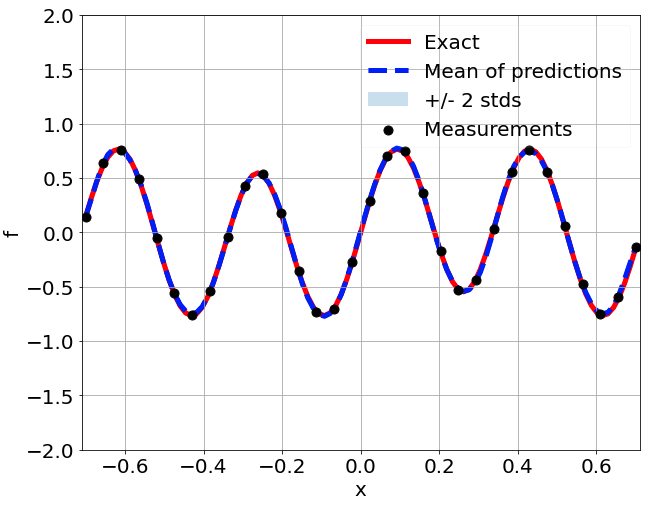

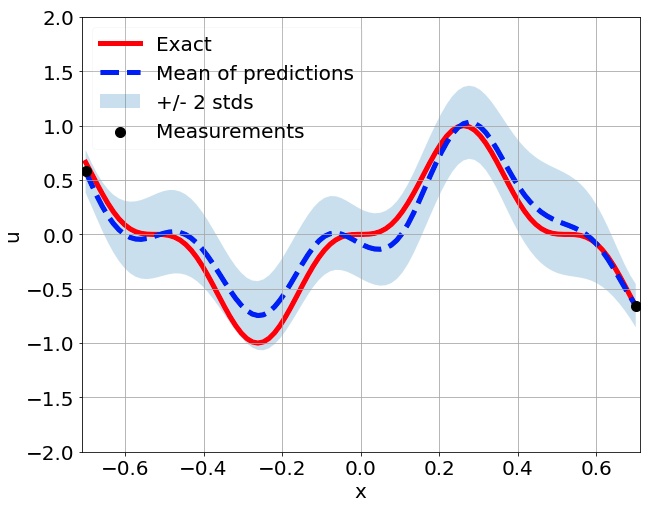

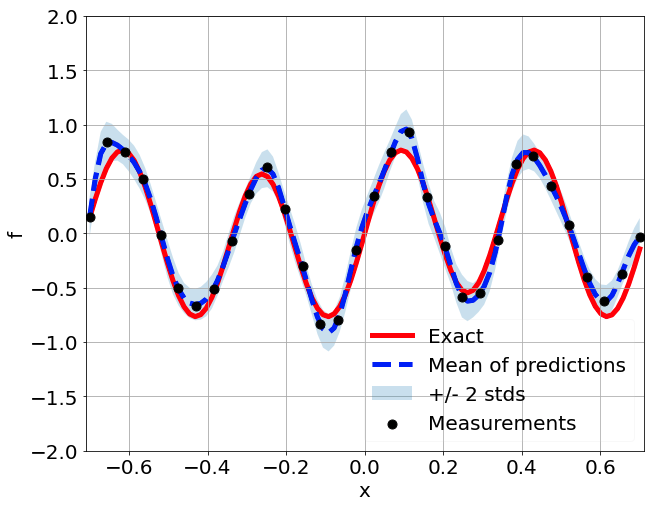

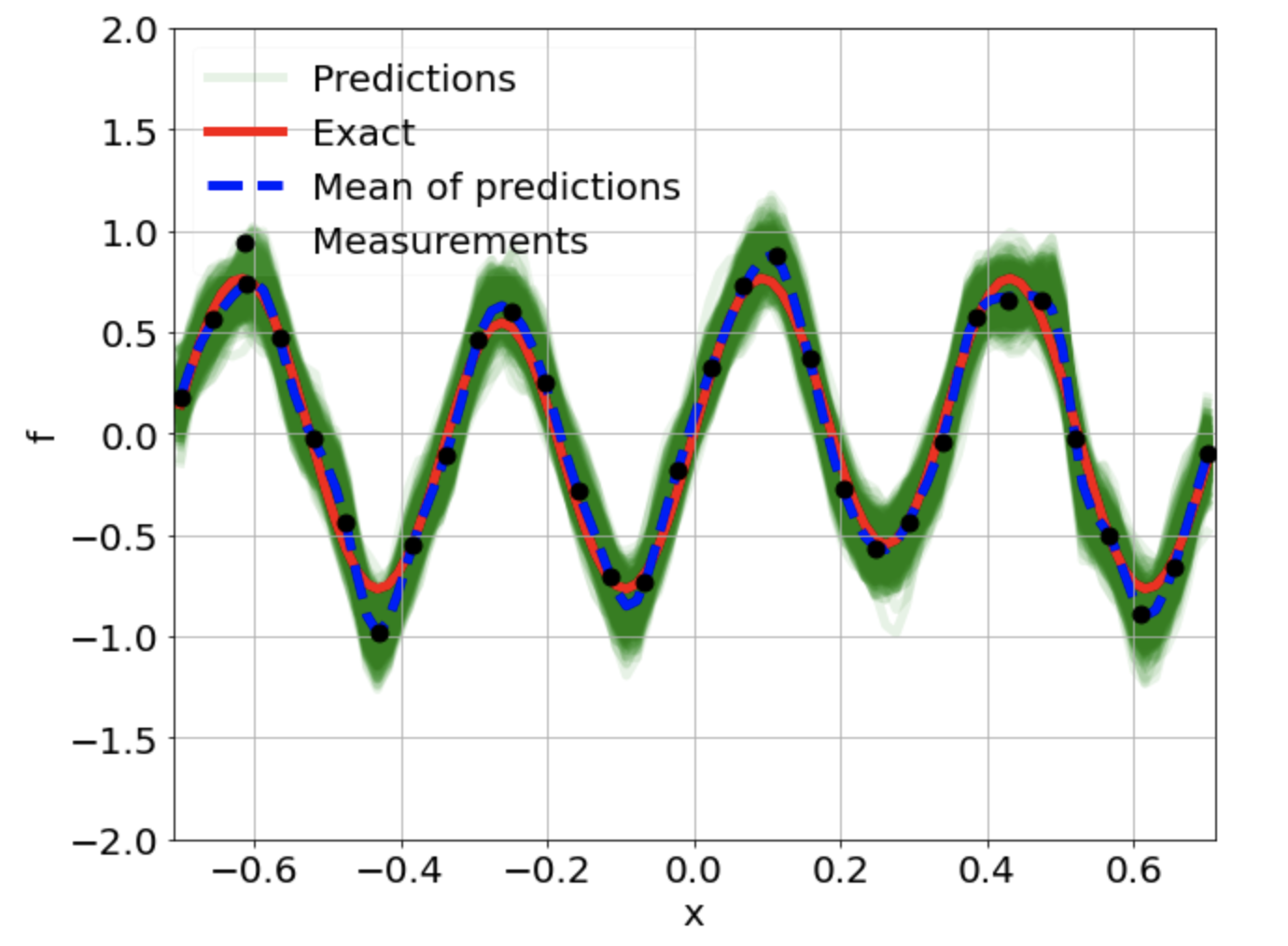

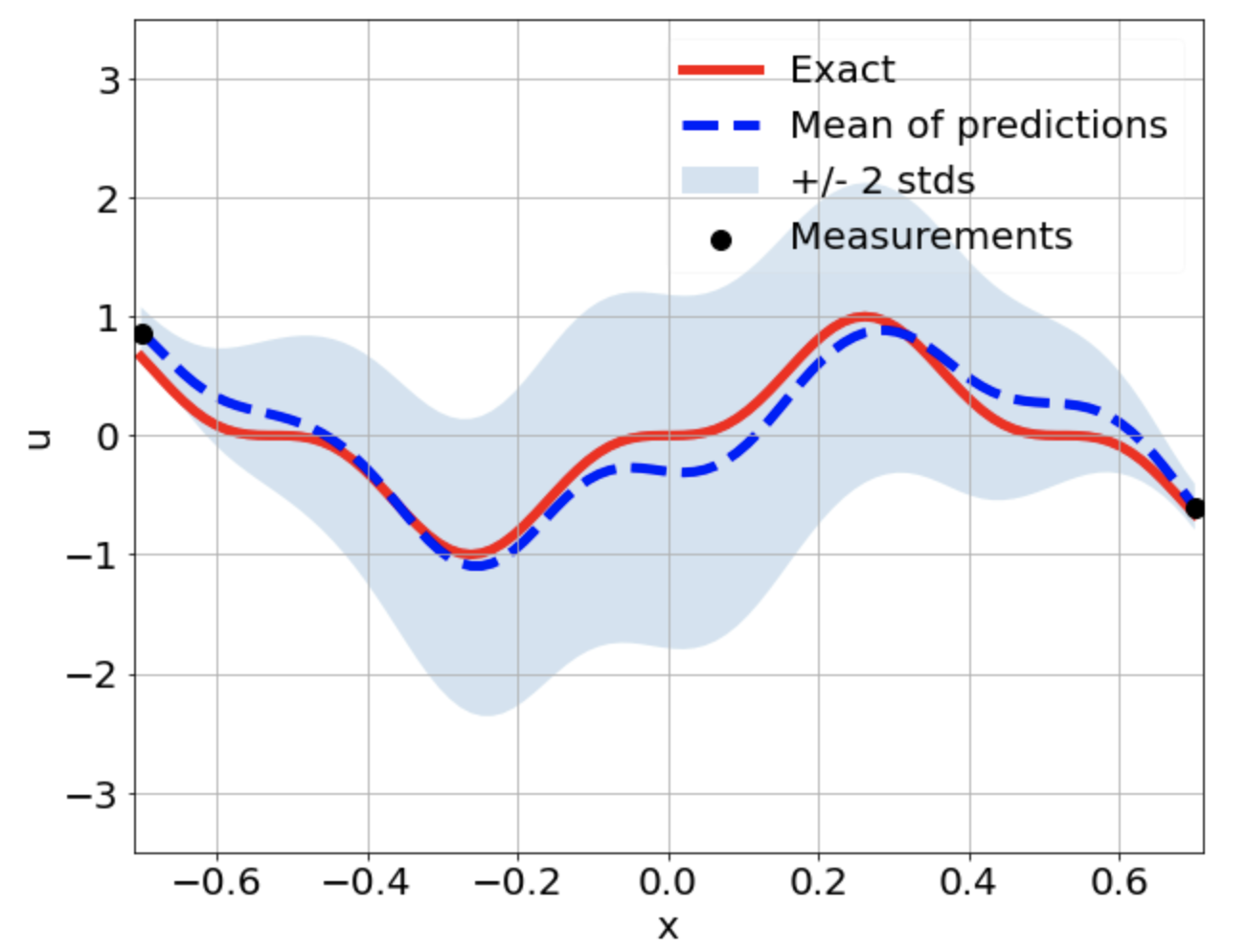

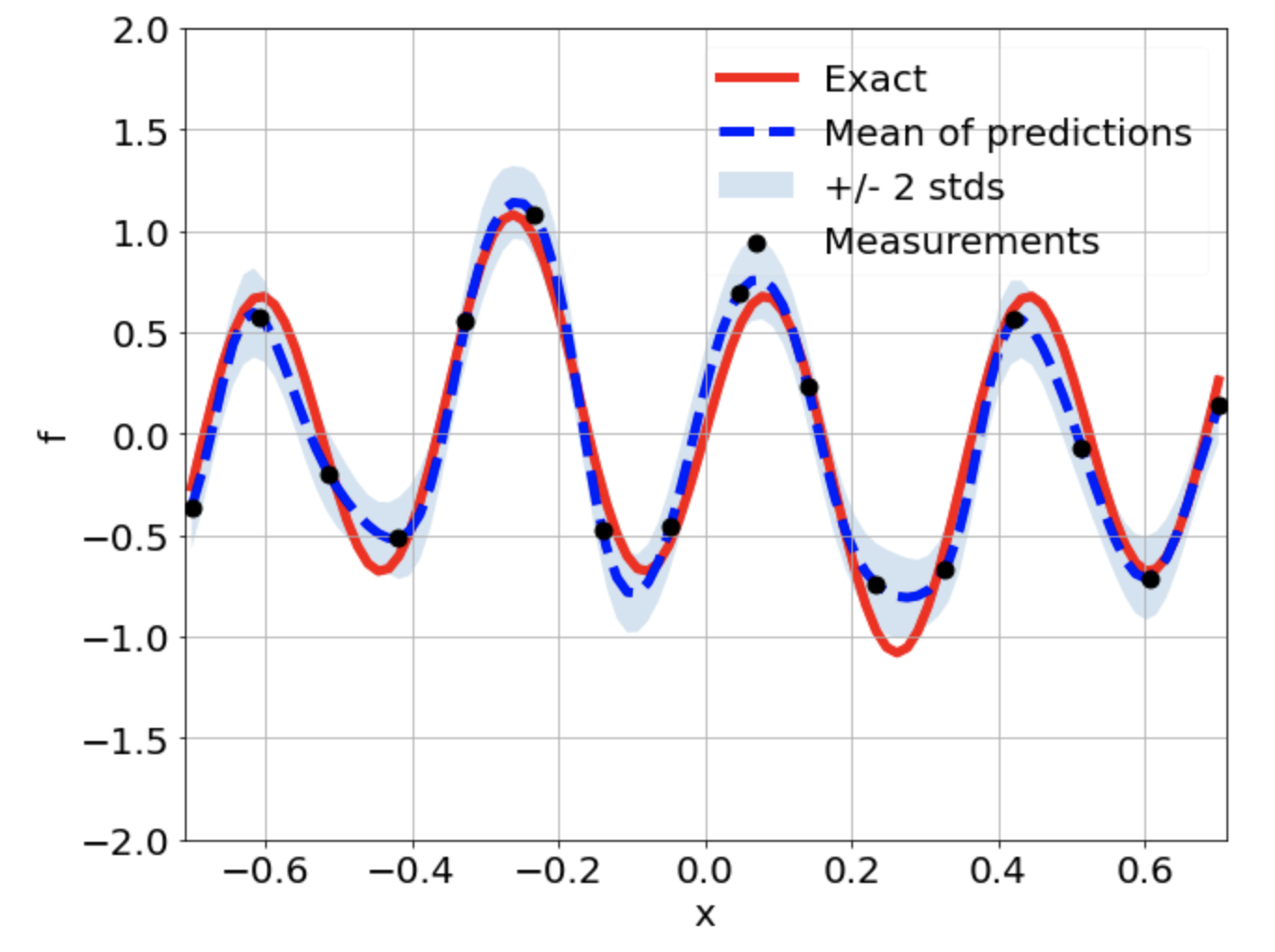

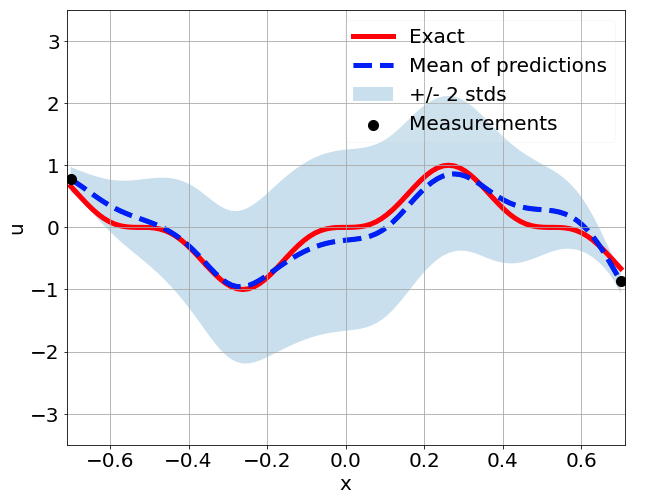

Predictions w/ \(\sigma = 0.01\) noise on measurements

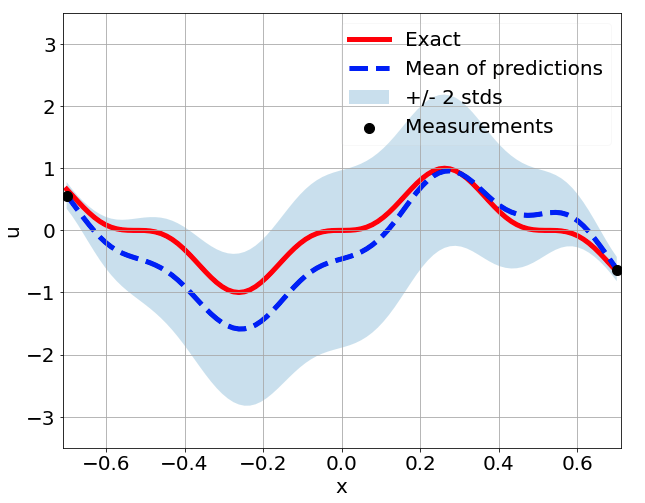

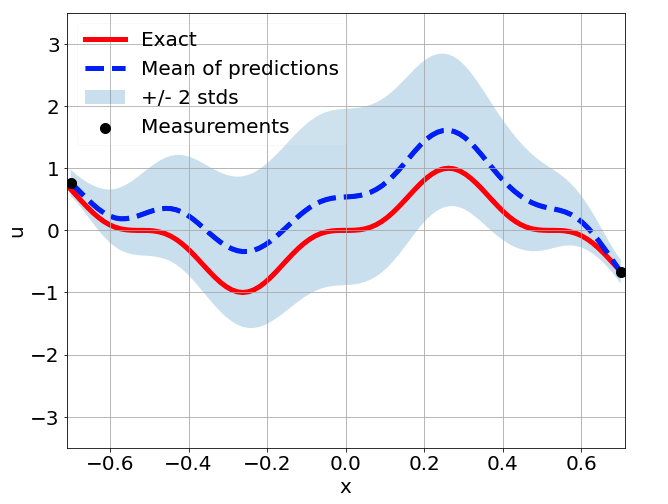

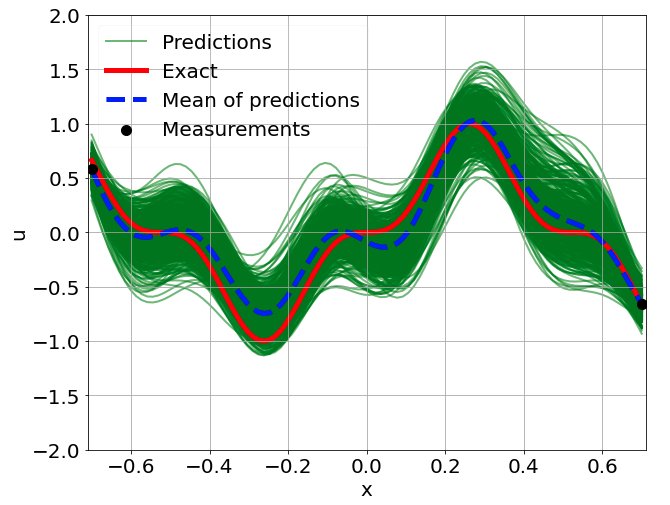

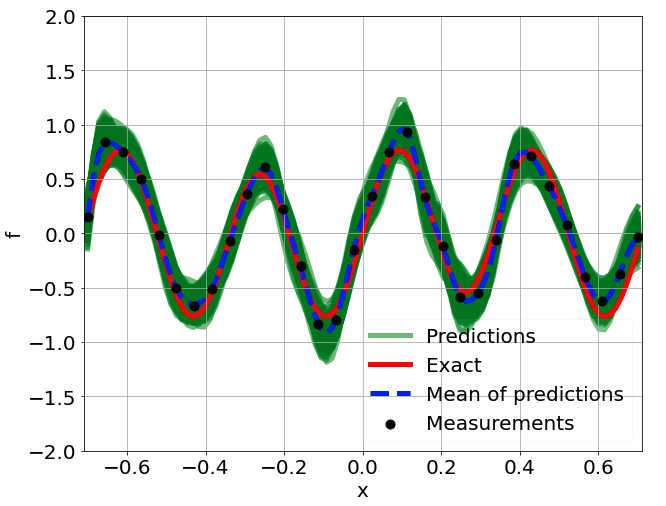

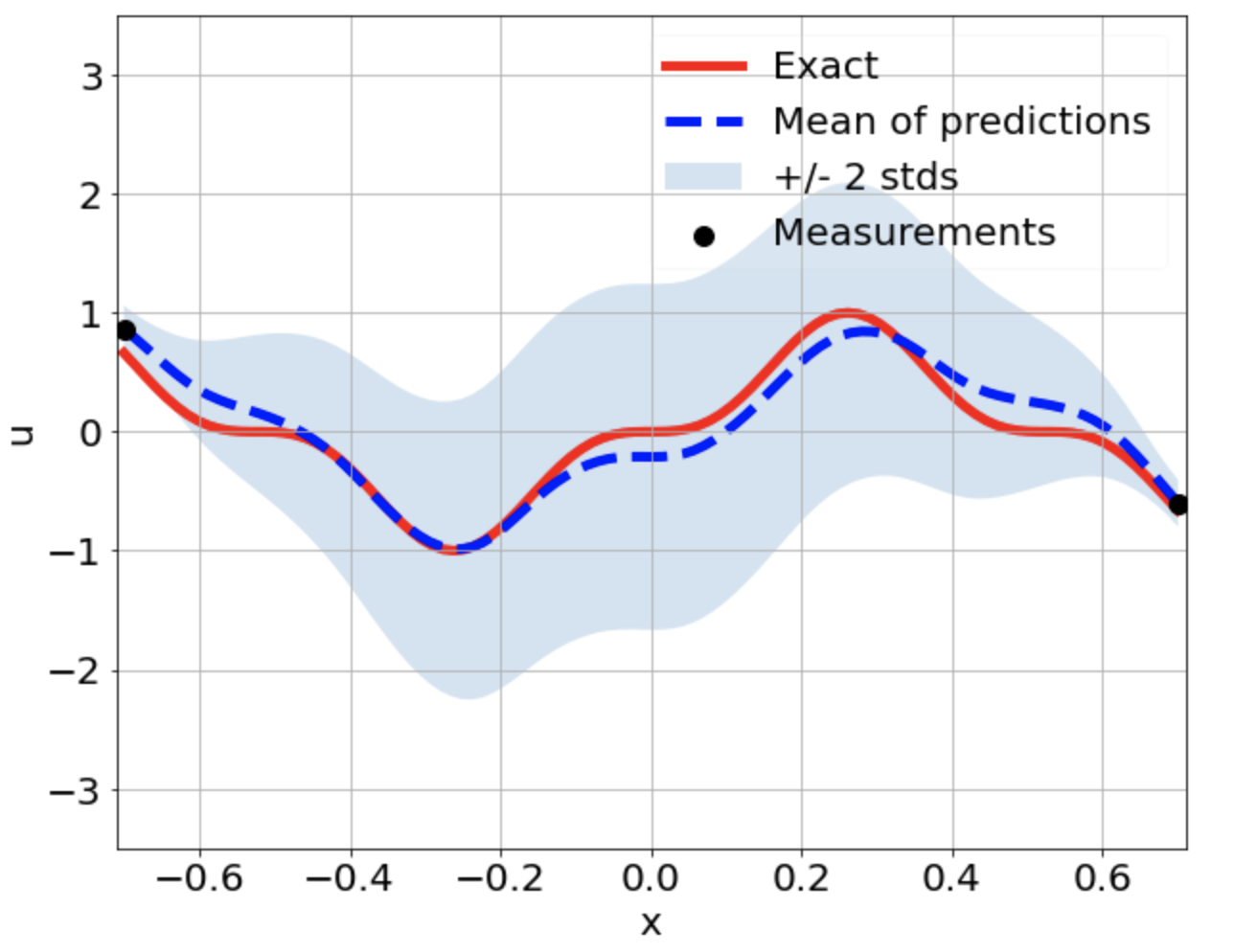

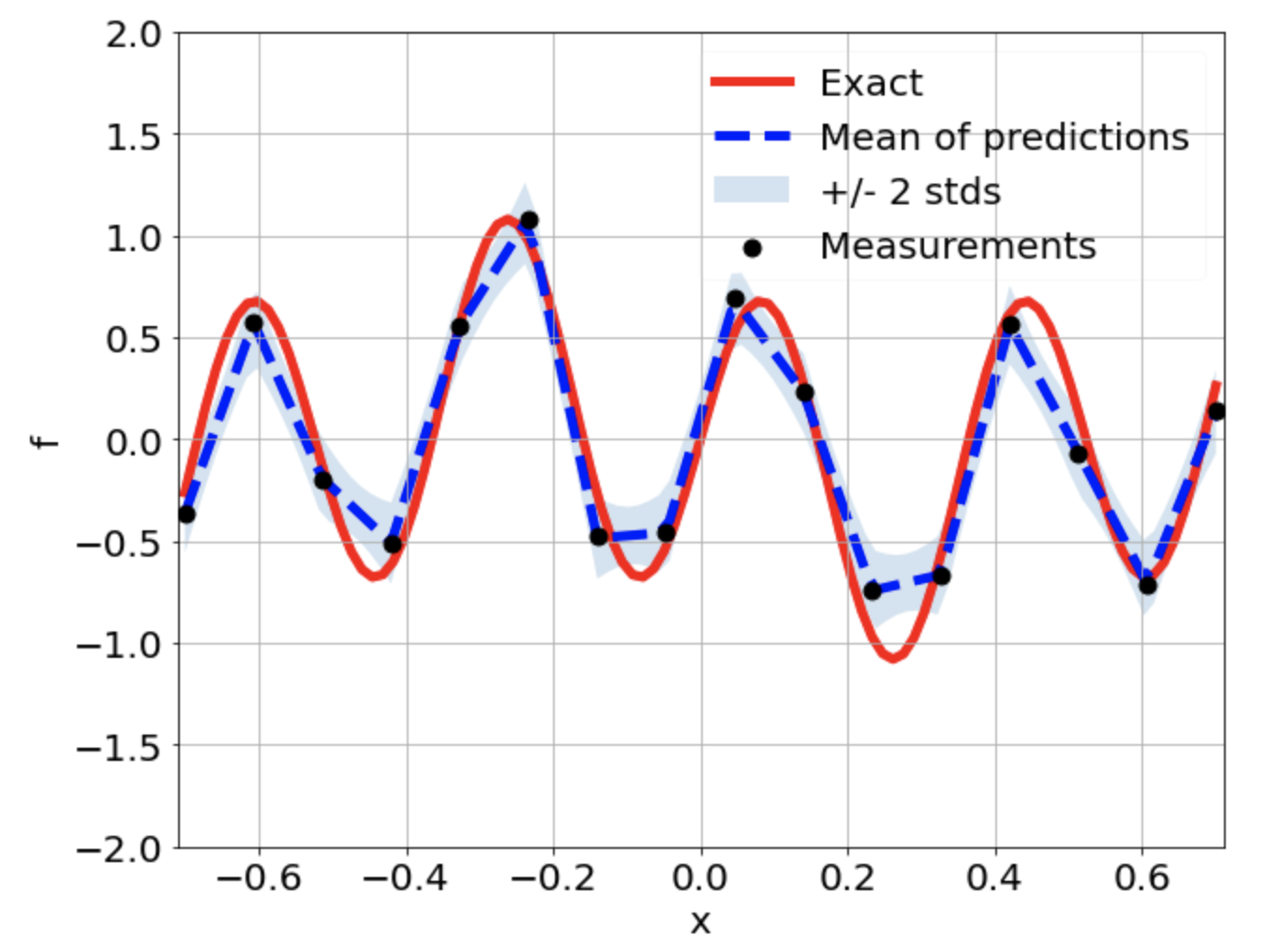

Predictions w/ \(\sigma = 0.1\) noise on measurements

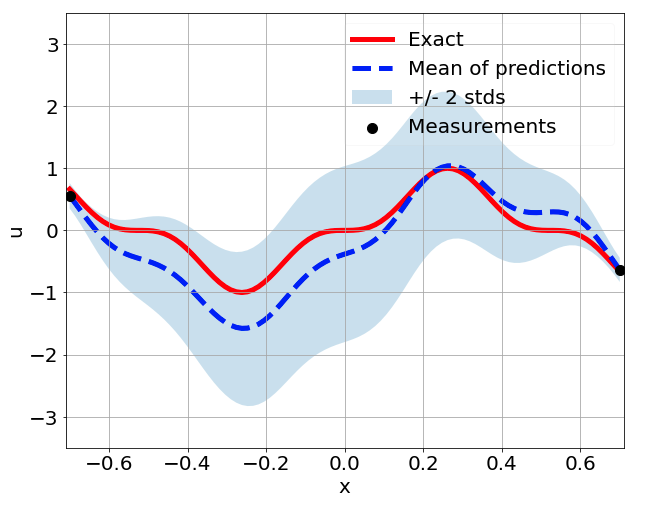

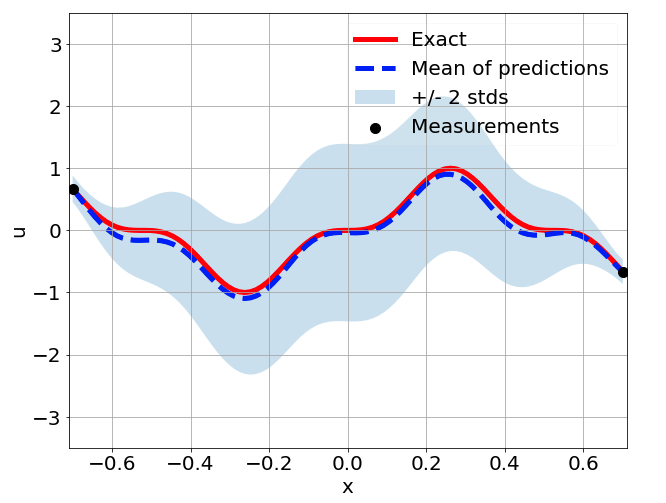

Sensitivity to random network parameter initialization

\(\sigma = 0.1\) noise

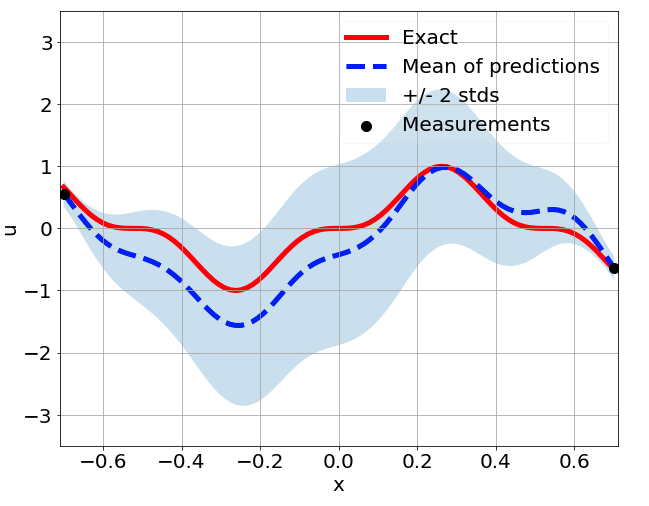

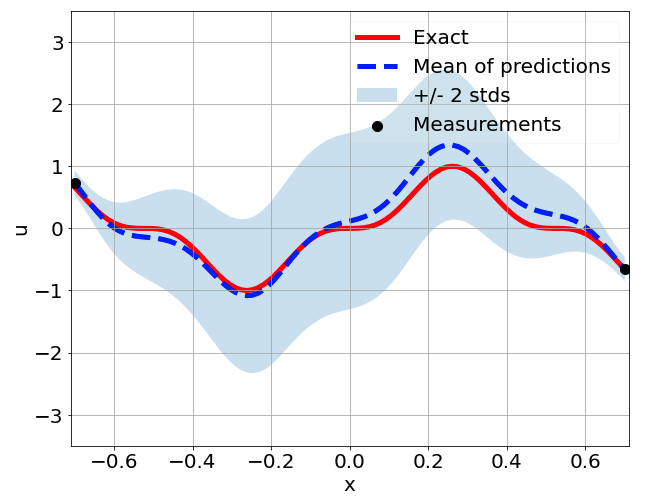

Sensitivity to measurement sampling

\(\sigma = 0.1\) noise

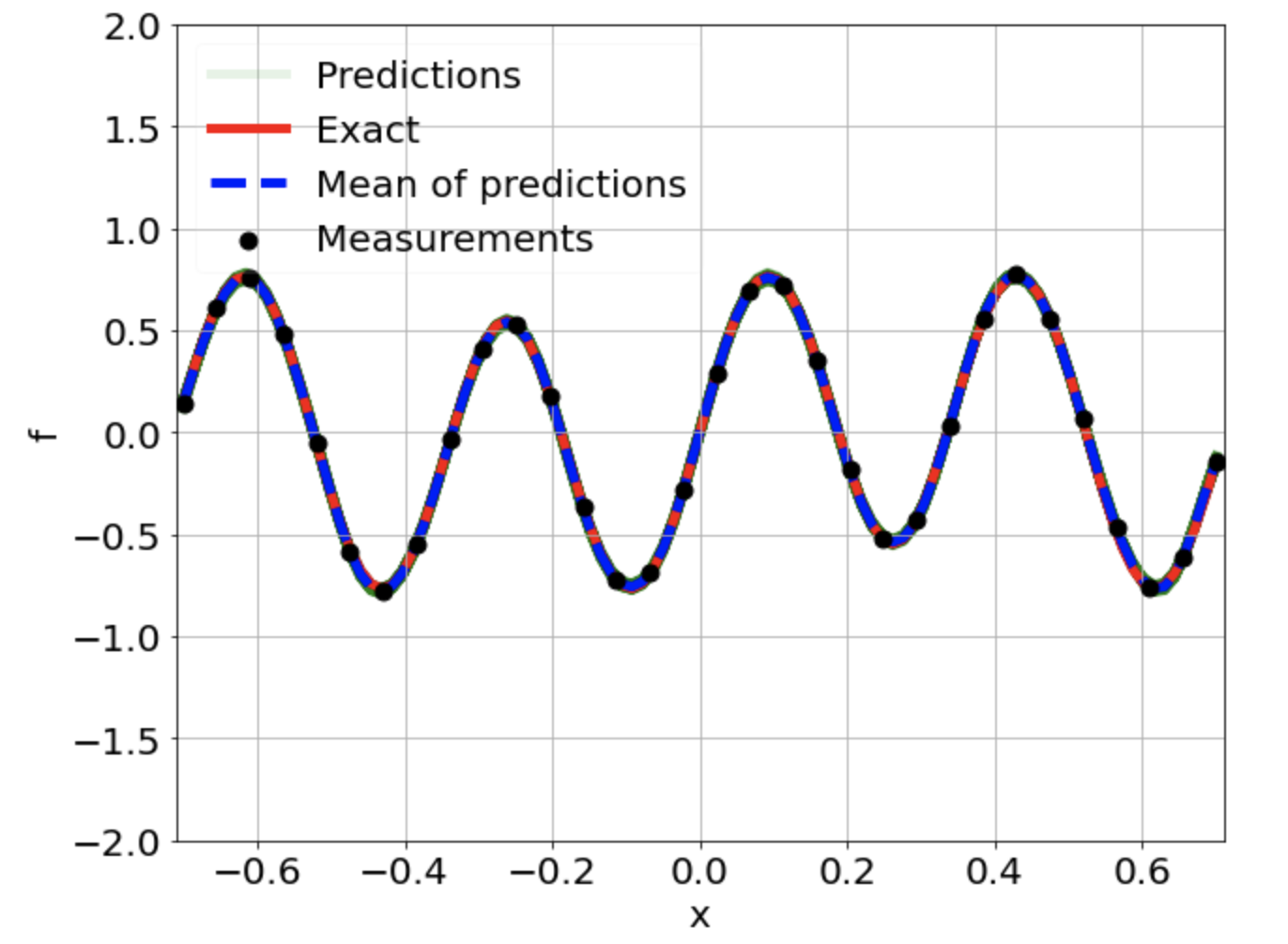

One-dimensional nonlinear Poisson equation

\[ \begin{gathered} \lambda \frac{\partial^2 u}{\partial x^2} + k \tanh(u) = f, \qquad x \in [-0.7, 0.7] \end{gathered} \] where \(\lambda = 0.01, k=0.7\) and \(u=\sin^3(6x)\)
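Reading \(u\) as a manufactured solution, the source term for this problem follows by direct substitution. The worked expression below is derived here and is not stated on the slide:

\[ \begin{gathered} \frac{\partial^2 u}{\partial x^2} = 216 \sin(6x)\cos^2(6x) - 108 \sin^3(6x) \\ f(x) = \lambda\left(216 \sin(6x)\cos^2(6x) - 108 \sin^3(6x)\right) + k \tanh\left(\sin^3(6x)\right) \end{gathered} \]

With \(\lambda = 0.01\) and \(k = 0.7\) this gives \(f(x) = 2.16 \sin(6x)\cos^2(6x) - 1.08 \sin^3(6x) + 0.7 \tanh\left(\sin^3(6x)\right)\).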

Predictions w/ \(\sigma = 0.01\) noise on measurements

Predictions w/ \(\sigma = 0.1\) noise on measurements

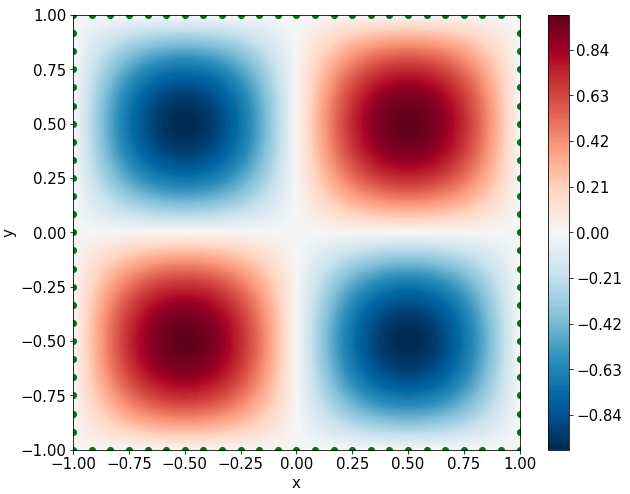

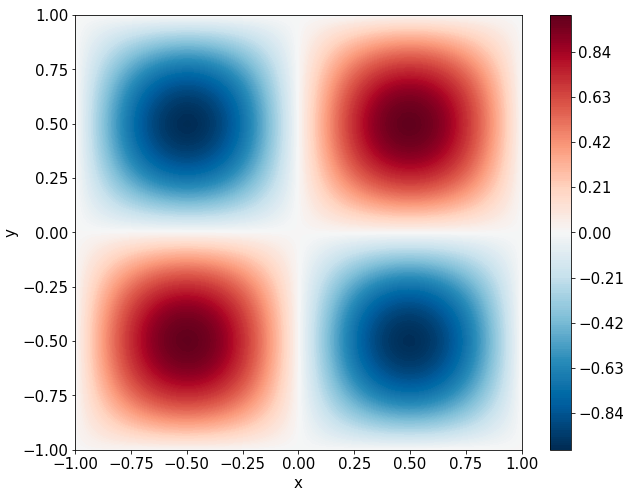

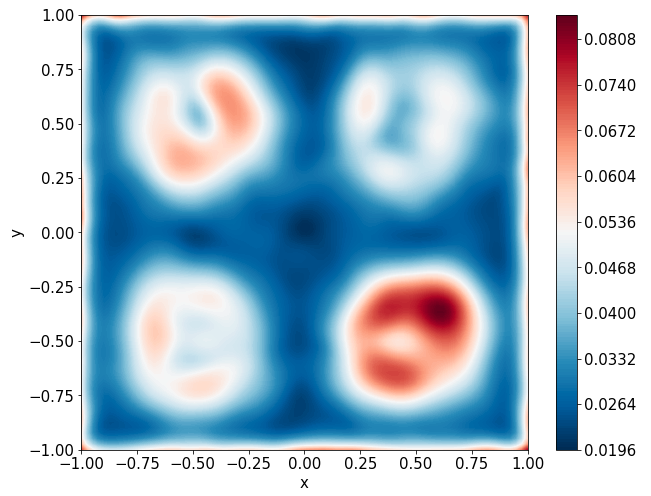

Two-dimensional nonlinear Allen-Cahn equation

\[ \begin{gathered} \lambda \left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}\right) + u\left(u^2 -1 \right) = f, \qquad x,y \in [-1, 1] \end{gathered} \] where \(\lambda = 0.01\) and \(u=\sin(\pi x)\sin(\pi y)\)
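For this choice of \(u\), the Laplacian is \(-2\pi^2 u\), so the source term reduces to \(f = u\left(u^2 - 1 - 2\pi^2\lambda\right)\). The sketch below evaluates that expression and checks it against a five-point finite-difference stencil. The function names are illustrative only.

```python
import math

LAM = 0.01  # lambda from the slide


def u_exact(x: float, y: float) -> float:
    """Manufactured solution u = sin(pi x) sin(pi y)."""
    return math.sin(math.pi * x) * math.sin(math.pi * y)


def f_exact(x: float, y: float) -> float:
    """f = lambda * laplacian(u) + u (u^2 - 1), with laplacian(u) = -2 pi^2 u."""
    u = u_exact(x, y)
    return LAM * (-2.0 * math.pi ** 2 * u) + u * (u * u - 1.0)


def f_finite_difference(x: float, y: float, h: float = 1e-4) -> float:
    """Same expression with the Laplacian replaced by a five-point stencil."""
    lap = (u_exact(x + h, y) + u_exact(x - h, y) + u_exact(x, y + h)
           + u_exact(x, y - h) - 4.0 * u_exact(x, y)) / (h * h)
    u = u_exact(x, y)
    return LAM * lap + u * (u * u - 1.0)
```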

Network architecture and hyperparameters

2 neural networks: \(u_{NN}\) and \(f_{NN}\)

3 hidden layers with 200 neurons each

\(\tanh\) activation function

ADAM optimizer

\(10^{-3}\) learning rate

Xavier normalization

50000 epochs

2000 outputs

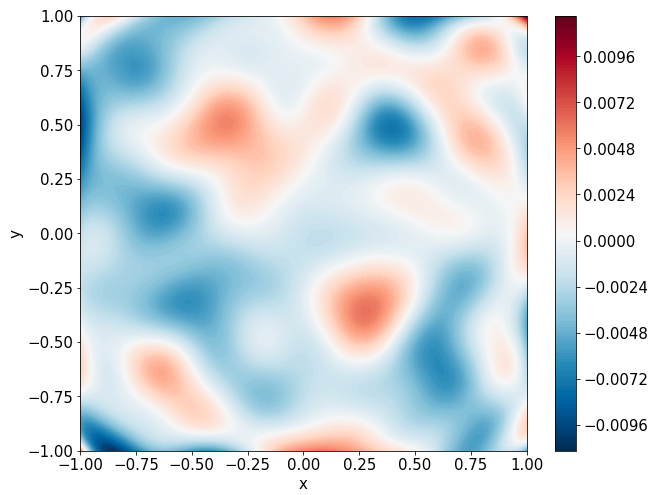

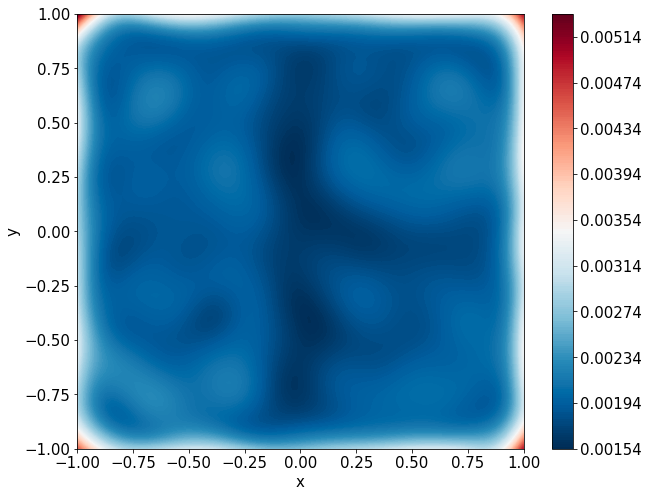

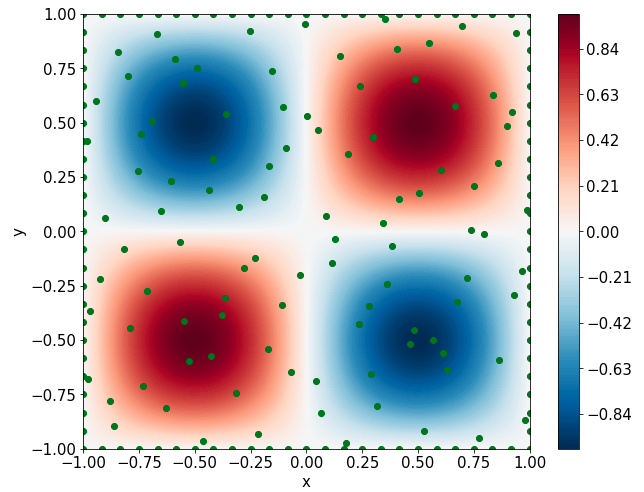

Predictions w/ \(\sigma = 0.01\) noise on measurements

Predictions w/ \(\sigma = 0.1\) noise on measurements

Inverse Problems

One-dimensional nonlinear Poisson equation

\[ \begin{gathered} \lambda \frac{\partial^2 u}{\partial x^2} + k \tanh(u) = f, \qquad x \in [-0.7, 0.7] \end{gathered} \] where \(\lambda = 0.01\).

\(k=[???, ???, ???, \dots, ???]\) with \(N\) entries corresponding to \(N\) outputs of the MO-PINN
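One way to picture the inverse setup: each output \(j\) carries its own trainable \(k_j\), the PDE residual for that output uses \(k_j\), and the trained values are then summarized. The sketch below shows the residual at a single point and a summary step. Treating the summary as a sample mean and standard deviation is an assumption, the function names are hypothetical, and the listed \(k\) values are placeholders rather than results.

```python
import math
import statistics
from typing import Sequence, Tuple

LAM = 0.01  # lambda from the slide


def pde_residual(u: float, u_xx: float, f: float, k: float) -> float:
    """r_d = lambda * u_xx + k * tanh(u) - f for one output at one point."""
    return LAM * u_xx + k * math.tanh(u) - f


def summarize_k(k_values: Sequence[float]) -> Tuple[float, float]:
    """Sample mean and standard deviation of the per-output estimates of k."""
    return statistics.fmean(k_values), statistics.stdev(k_values)


# Placeholder per-output estimates, not results from the talk.
k_avg, k_std = summarize_k([0.6, 0.7, 0.8])
```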

Predictions

\(u\) and \(f\)

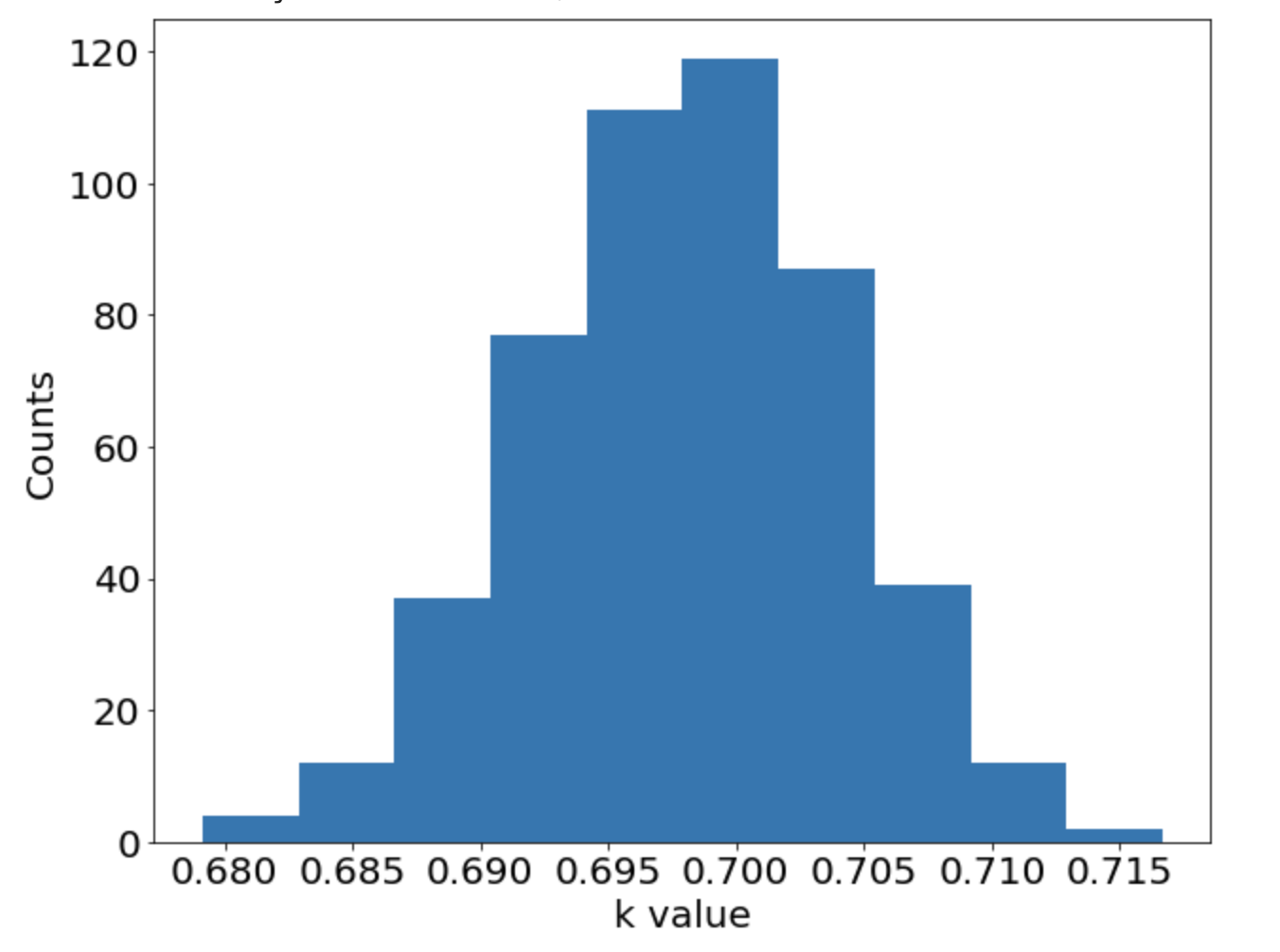

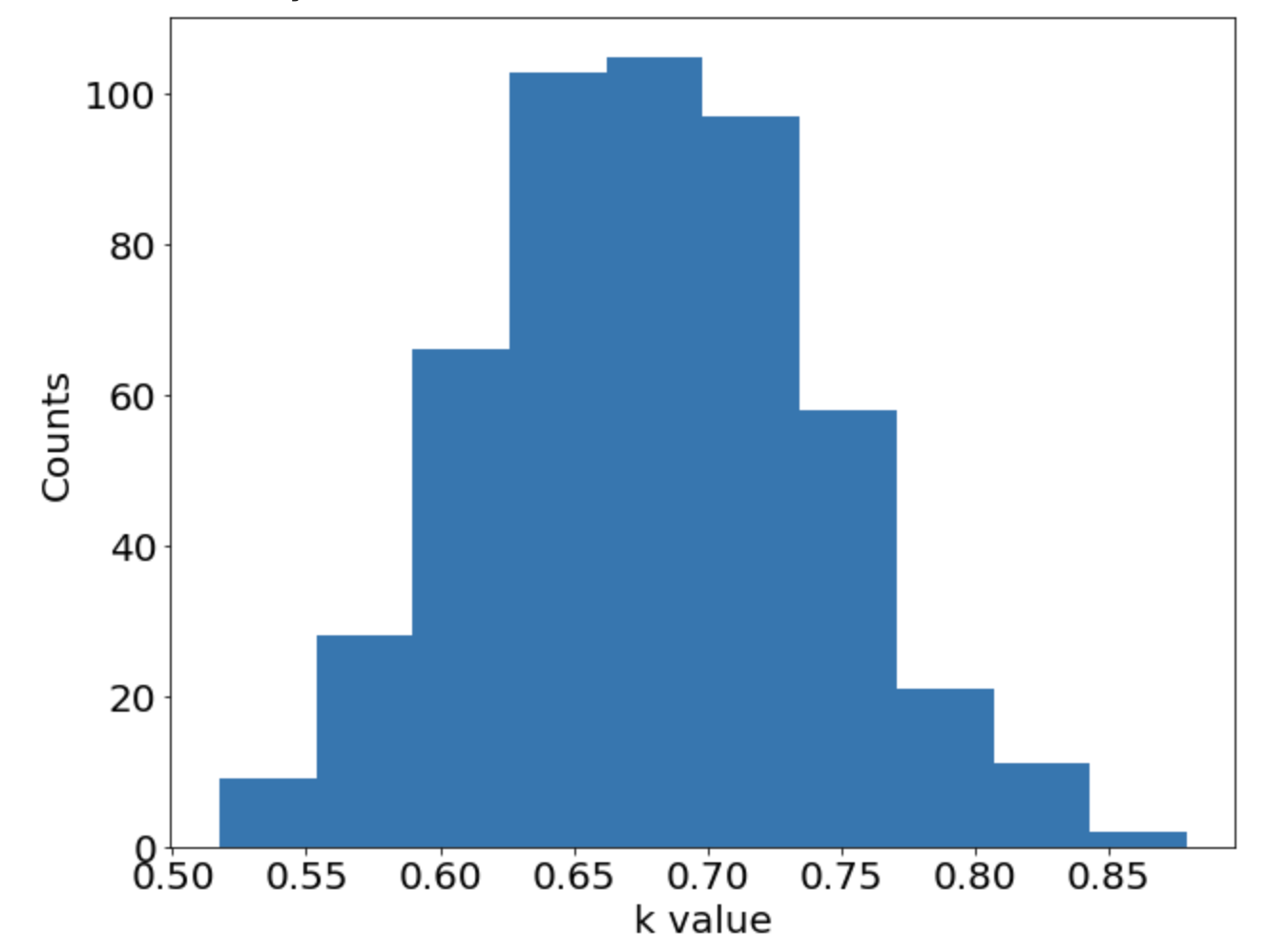

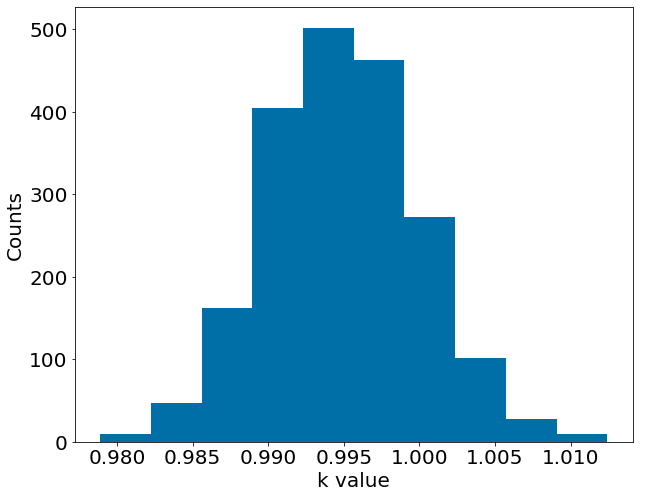

Inverse Estimates

\(k_{exact} = 0.7\)

Sensitivity of \(k_{avg}\) w.r.t. the number of outputs

\(\sigma=0.1\) noise

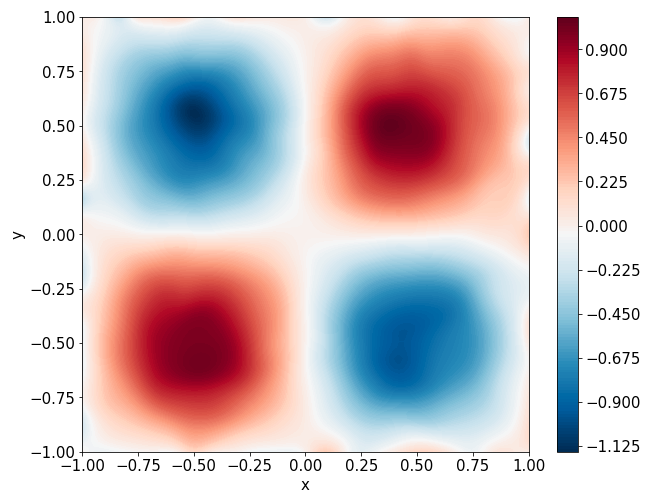

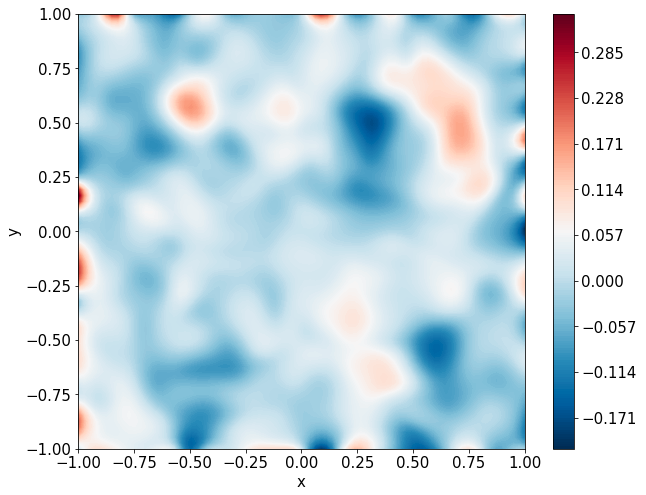

Two-dimensional Allen-Cahn Equation

\[ \begin{gathered} \lambda \left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}\right) + u\left(u^2 -1 \right) = f, \qquad x,y \in [-1, 1] \end{gathered} \] where \(\lambda = 0.01\) and \(u=\sin(\pi x)\sin(\pi y)\)

\(k=[???, ???, ???, \dots, ???]\) with \(N\) entries corresponding to \(N\) outputs of the MO-PINN

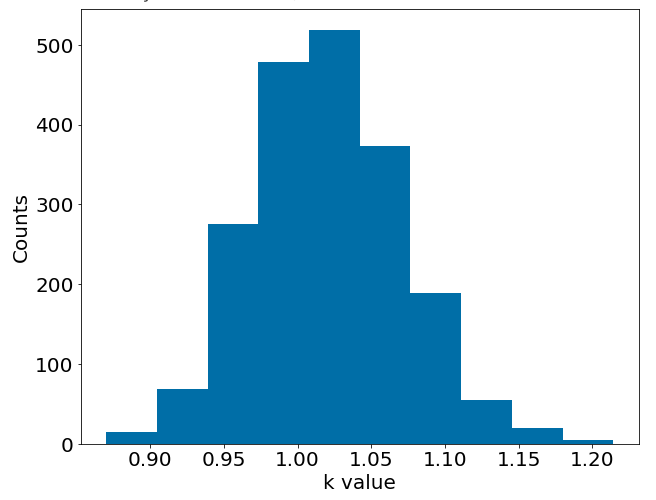

Inverse Estimates

\(k_{exact} = 1.0\)

Incorporating prior statistical knowledge

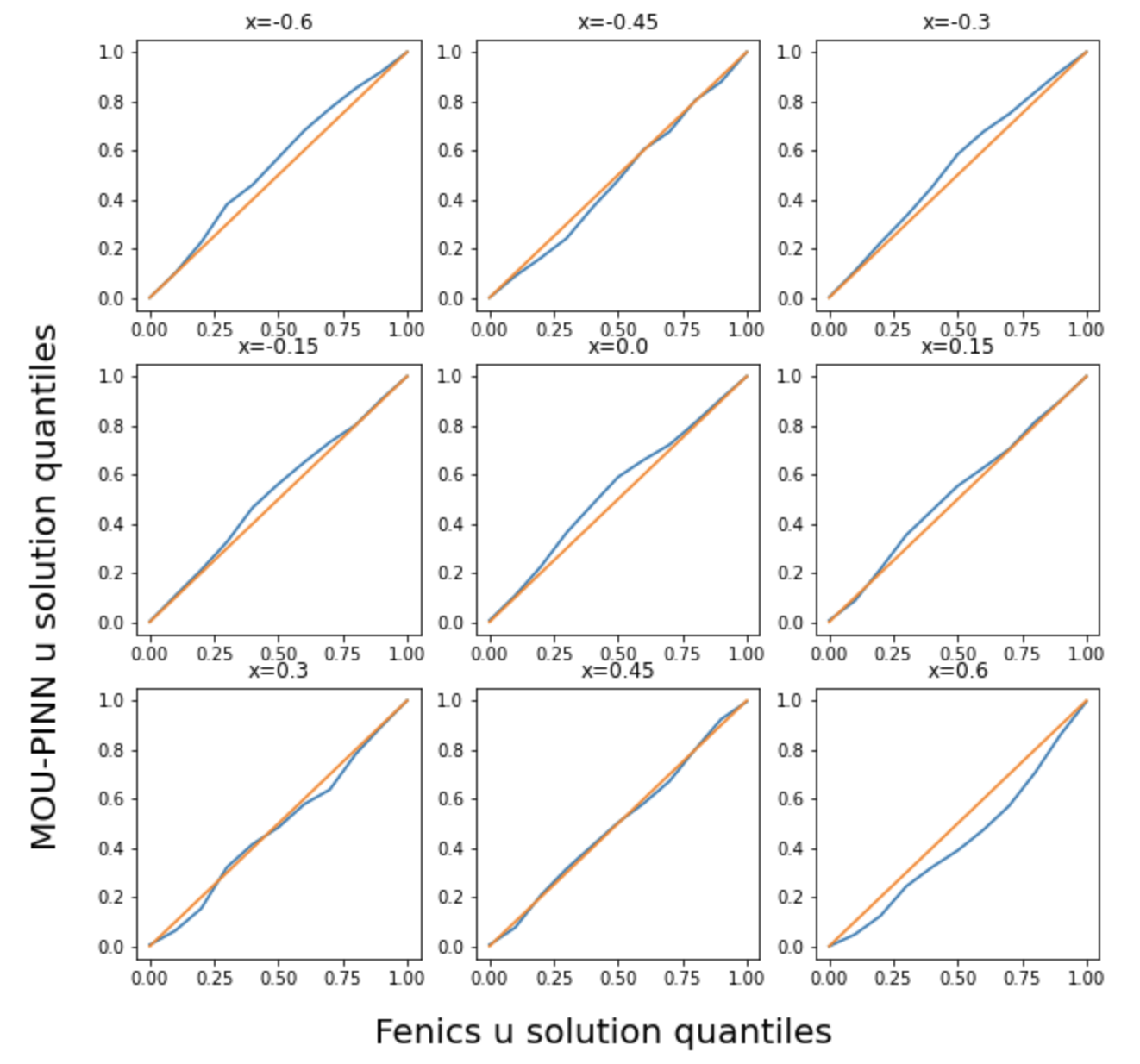

Comparison to Monte Carlo FEM

One-dimensional linear Poisson equation

Comparison of distributions

MO-PINN vs. FEA Monte Carlo

Quantile-quantile plot of \(u\) at 9 locations
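A quantile-quantile comparison pairs matching quantiles of two samples; points close to the line \(y = x\) indicate that the two distributions agree. The sketch below computes such pairs for the values of \(u\) at a single location. The function name `qq_points`, the number of quantiles, and the interpolation method are assumptions, not details from the talk.

```python
import statistics
from typing import List, Sequence, Tuple


def qq_points(samples_a: Sequence[float], samples_b: Sequence[float],
              n_quantiles: int = 20) -> List[Tuple[float, float]]:
    """Pair matching quantiles of two samples (n_quantiles - 1 cut points each)."""
    qa = statistics.quantiles(samples_a, n=n_quantiles, method="inclusive")
    qb = statistics.quantiles(samples_b, n=n_quantiles, method="inclusive")
    return list(zip(qa, qb))


# Toy samples standing in for MO-PINN outputs and Monte Carlo FEM solutions.
sample = [float(i) for i in range(101)]
points = qq_points(sample, sample, n_quantiles=4)
```

The two samples do not need the same size, so several hundred network outputs can be compared directly with a Monte Carlo ensemble of a different size.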

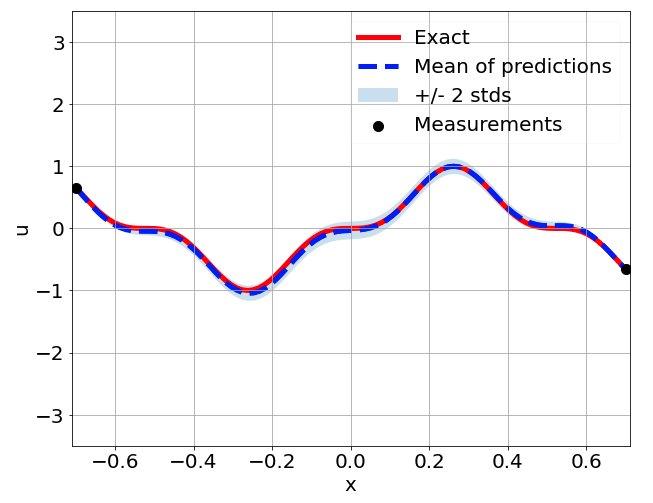

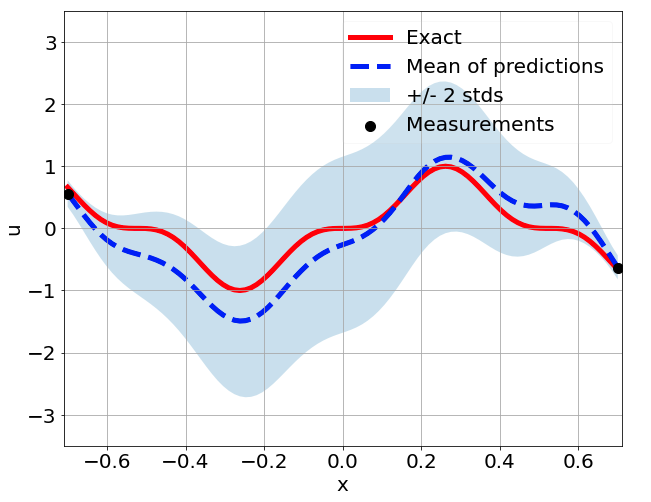

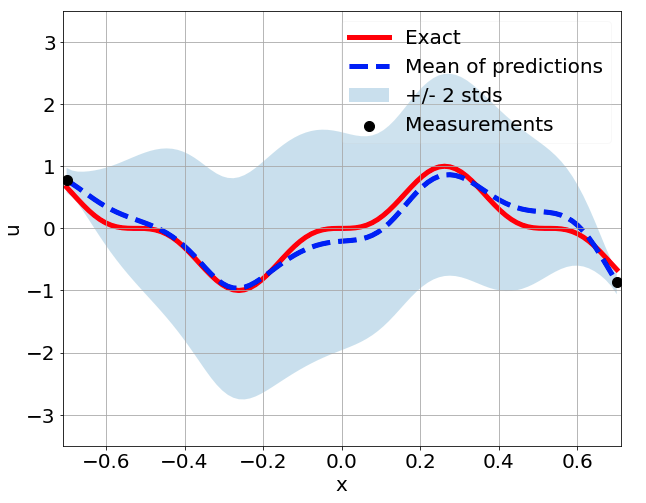

\(u\) predictions with only 5 measurements

Using mean and std to enhance learning

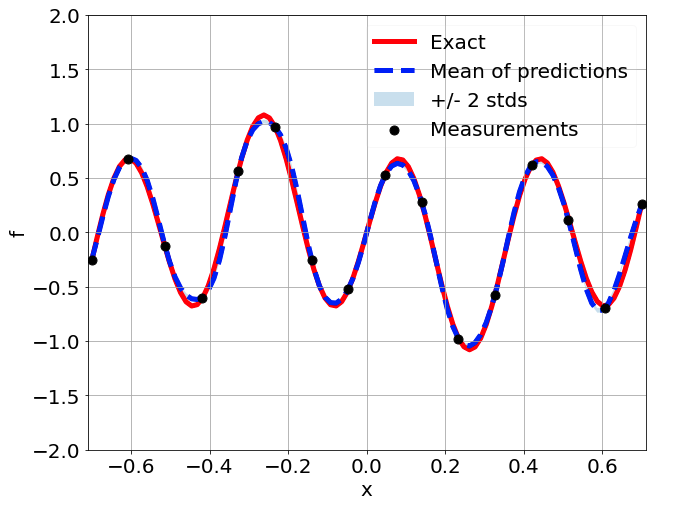

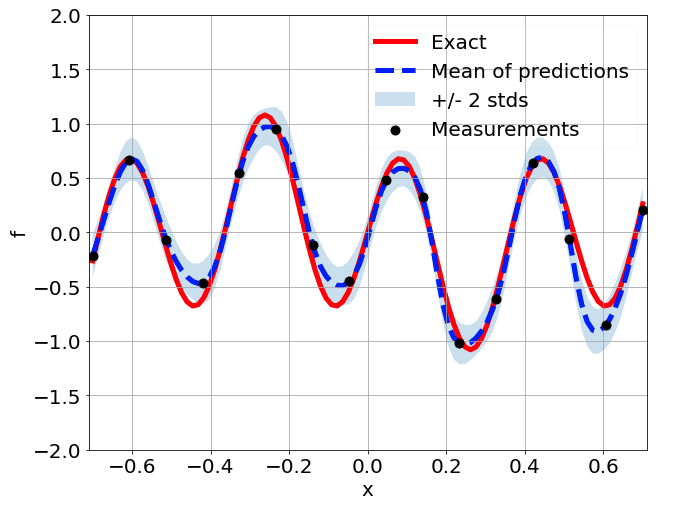

\(f\) predictions with only 5 measurements

Using mean and std to enhance learning

Conclusions

- MO-PINNs appear promising for UQ

- MO-PINNs can learn solution, source terms, and parameters simultaneously

- MO-PINNs are faster than Monte Carlo forward solutions for the problem studied

- Only need to train a single network

References

DiReCT Annual Review Meeting - August 18, 2023