Run Peridigm (and other scientific HPC codes) without building via Docker

UPDATE 6/1/2022: This post has been edited, replacing the original Docker image location at johntfoster/peridigm with the current location at peridigm/peridigm. Additionally, the parallel computing discussed below can now be accomplished much more easily by using docker-compose. See the Running Simulations with Peridigm Docker Image section of the Peridigm repository for more details.

If you're a software engineer working in web development or cloud software deployment, you would have had to have your head in the sand for the last two years not to have heard of Docker by now. Briefly, Docker is a lightweight application container deployment service (think super low-overhead virtual machine, usually meant to contain one application and its dependencies), based on Linux containers. While there are hundreds of articles describing Docker and its uses, and many services and even companies springing up around it, my colleagues in the scientific computing/high-performance computing (HPC) world are either not aware of Docker or don't see the utility, because it's hard to find significant information on, or uses of, Docker in this field. This post will hopefully demonstrate some of the utility of the service and promote its use in HPC and in academic settings. Additionally, as a contributor to the open-source software Peridigm, my hope is that the path demonstrated here might lower the courage required to try out the software and attract new users.

To provide some background/context for my own interest in Docker: in my current role as an academic advisor to graduate students, and in my previous career as a researcher at Sandia National Laboratories, I have often been a user and/or developer of scientific software that has a fairly complex dependent application stack. To use Peridigm, a massively parallel computational peridynamic mechanics simulation code, as an example, we have a large dependency on Trilinos. The packages we use from Trilinos have additional dependencies on a message passing interface (MPI) implementation (e.g. OpenMPI), Boost, and NetCDF. NetCDF has a dependency on HDF5, HDF5 on zlib, etc. All of these need a C/C++ compiler for building, of course, and if we are using the Intel compilers we might as well go ahead and use the MKL libraries for efficiency. Trilinos uses CMake as a build system, so we need that around as well, at least during the build process. I'm sure there are others I am not thinking of at this moment. Figuring out and understanding these dependencies and learning to debug build issues can take a really long time for a budding computational scientist to master. Problems can be compounded on a large multi-user HPC cluster where many different versions of compilers and libraries are present. Using UNIX modules goes a long way towards keeping things straight, but problems still arise that can be time-consuming to troubleshoot. I usually have new graduate students go through the process of building Peridigm and all the dependencies for their own learning experience when they first join my group; in every case they struggle considerably the first few times they have to build the entire suite of software on a new machine. In some cases, particularly with MS-level students, it can be a serious impediment to progress, as they have very short timelines to make meaningful research progress, and oftentimes they are only going to be end users of the code, not developers. Almost certainly they are not going to make changes to any of the packages in Trilinos or any of the other dependencies outside of Peridigm. Enter Docker.

Docker allows applications to run in prebuilt containers on any Linux machine with Docker installed (or even Mac OS X and Windows via Boot2Docker). If your Linux distribution doesn't already have it installed, just use your package manager (e.g. apt-get install docker.io on Debian/Ubuntu-based machines and yum install docker on Fedora/Red Hat-based machines). Docker is so ubiquitous at this point that there are even entire Linux distributions like CoreOS being built specifically to maximize its strengths. Additionally, there is the Docker Hub Registry, which provides GitHub-like push/pull operations and cloud storage of prebuilt images. Of course, you or your organization can host your own Docker image registry as well (note: think of a Docker image like a C++ class, and a Docker container as a C++ object, or an instance of the image that runs). You can derive images from one another, and this is what I have done in setting up an image of Peridigm. First, I have an image that starts with a baseline Debian-based Linux and installs NetCDF and its dependencies. I have two tagged versions, a standard NetCDF build and a largefiles patched version which makes the large file modifications suggested in the Peridigm build documentation. The NetCDF largefiles image can be pulled to a Docker user's local machine from my public Docker Hub Registry with

docker pull peridigm/netcdf:largefiles

The NetCDF image is built automatically via continuous integration with a GitHub repository that contains a Dockerfile with instructions for the image build process. I then derive a Trilinos image from the NetCDF largefiles image. This image contains only the Trilinos packages that must be enabled for Peridigm to be built. The Trilinos image can be pulled to a local machine with

docker pull peridigm/trilinos

This Trilinos image could be used to derive other images for other Trilinos-based application codes which utilize the same dependent packages as Peridigm. The CMake build script which shows the active Trilinos packages in this image can be viewed here. This image is also built via continuous integration with this GitHub repository, which can easily be modified to include more Trilinos packages. Finally, the Peridigm image can be pulled to a local machine with

docker pull peridigm/peridigm

This image is built from the Dockerfile found here; however, if you want to build your own image, the Dockerfile must be modified slightly to work with a local Peridigm source distribution, because I do not want to distribute the Peridigm source code, as that is done by Sandia National Laboratories (see here if interested in getting the source). To run Peridigm you simply have to run the command:

docker run --name peridigm0 -d -v `pwd`:/output peridigm/peridigm Peridigm fragmenting_cylinder.peridigm

Even if you have not pulled the images locally from the Docker Hub Registry, this command will initiate the download process and run the command after downloading. Because the image contains all the necessary dependencies, it is around 2GB in size; therefore, it takes a while to download on the first execution. I have not made any attempts to optimize the image size, but it's likely it could be made smaller to speed this process up. Once you have the image locally, launching a container to run a simulation takes only milliseconds. The --name option gives the container the name peridigm0. This could be any name you choose, and naming the container is not required, but it makes for an easy way to stop the execution if you wish. If you need to stop the execution, simply run docker stop peridigm0. The -d option "detaches" the terminal so that the process runs in the background; you can reattach if needed with docker attach peridigm0. The -v option is critical for retrieving the data generated by the simulation. Docker handles data a little strangely, so you have to mount a local volume, in this case the current working directory returned by pwd, but in general it could be any /path/to/data/storage. The local path is mounted to the volume /output that has been created in the Peridigm Docker image. You must place your input file, in this case fragmenting_cylinder.peridigm from the examples distributed with Peridigm, in your local shared mount for the Peridigm executable located in the Docker image to access it. As the simulation runs, you will see an output file fragmenting_cylinder.e appear in the shared mount. The other two arguments, peridigm/peridigm and Peridigm, are the image name in the Docker Hub Registry and the executable, respectively.
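For readers who would rather launch the container from a script, the same command can be assembled in Python. This is only a minimal sketch and not part of Peridigm or its Docker image: the helper name peridigm_run_command is hypothetical, and it does nothing more than build the argument list discussed above (--name, -d, -v, the image name, and the executable).

```python
import os


def peridigm_run_command(input_file, workdir=None, name="peridigm0",
                         image="peridigm/peridigm"):
    """Build the `docker run` argument list described in the text.

    Hypothetical helper for illustration only: it mirrors the command
    above, mounting `workdir` (default: current directory) at /output.
    """
    workdir = os.path.abspath(workdir or os.getcwd())
    return [
        "docker", "run",
        "--name", name,              # container name, used later by `docker stop`
        "-d",                        # detach and run in the background
        "-v", f"{workdir}:/output",  # share the local directory with the container
        image,                       # image name on the Docker Hub Registry
        "Peridigm", input_file,      # executable inside the image and its input file
    ]


if __name__ == "__main__":
    # Only prints the command; nothing is executed here.
    print(" ".join(peridigm_run_command("fragmenting_cylinder.peridigm")))
```

The returned list can be handed to subprocess.run(cmd, check=True) on a machine with Docker installed; building the list separately makes it easy to inspect the command before running it.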

To quickly recap, if all you want is to test out Peridigm, follow these 3 steps:

- Install Docker via your package manager on Linux or utilize Boot2Docker on Mac OS X and Windows.

- Place a Peridigm input file such as fragmenting_cylinder.peridigm in a directory.

- Run the docker run ... command above, replacing `pwd` with the name of the directory where you placed the file in the second step, if not running from the current working directory.

That's it. No compiling, no dependencies. Most of the performance studies I've seen report a very small, 1-2% hit from the Dockerized version of an application over a natively installed application stack.

Of course, Peridigm is meant to be run in a massively parallel setting. A Docker container can take advantage of some multithreading, so you can also just run an MPI job right inside a Docker container. For Peridigm we can simply run

docker run --name peridigm0 -d -v `pwd`:/output peridigm/peridigm mpiexec -np 2 Peridigm fragmenting_cylinder.peridigm

for a possibly small performance gain. If you run this command, you should now see the domain-decomposed output files, e.g. fragmenting_cylinder.e.0 and fragmenting_cylinder.e.1, appear in your mounted directory. However, this alone will not give you the true gains you expect from multicore CPUs or anything close to what you would see on a massively parallel HPC cluster. We can spawn a virtual cluster of Docker containers from the peridigm/peridigm image and use MPI to communicate between them. To do this, we launch our virtual cluster with docker run ... but this time without any execution command. The default is for these containers to be ssh servers, so they will sit there idle until we need them for an MPI task. For example, if we want a 2-node job, we can run

docker run --name peridigm0 -d -P -h node0 -v `pwd`:/output peridigm/peridigm

docker run --name peridigm1 -d -P -h node1 -v `pwd`:/output peridigm/peridigm

This launches containers named peridigm0 and peridigm1 that will have local (to their containers) machine names node0 and node1. Now we can find the containers' IP addresses with the following commands

docker inspect -f "{{ .NetworkSettings.IPAddress }}" peridigm0

docker inspect -f "{{ .NetworkSettings.IPAddress }}" peridigm1

These commands will return the IP addresses. Just for demonstration purposes, let's assume that they are 10.1.0.124 and 10.1.0.125, respectively. Now we can launch mpiexec to run Peridigm on our virtual cluster. This time the containers are already running, so all we have to do is run

docker exec peridigm0 mpiexec -np 2 -host 10.1.0.124,10.1.0.125 Peridigm fragmenting_cylinder.peridigm

which executes mpiexec on the container named peridigm0 and passes a host list to make MPI aware of where the nodes waiting for tasks are. You can have fine-grained control over mpiexec as well; for example, if you wanted to run 2 MPI tasks per Docker container, you could do something like

docker exec peridigm0 mpiexec -np 4 -num-proc 2 -host 10.1.0.124,10.1.0.125 Peridigm fragmenting_cylinder.peridigm

When you're finished, you can stop and remove the containers with

docker stop peridigm0 peridigm1

docker rm -v peridigm0 peridigm1

The -v option to docker rm ensures that the mounted volumes are removed as well and that there are no orphaned file system volume shares. I have written a Python script (shown below) that automates the whole process for an arbitrary number of Docker containers in a virtual cluster.

You can utilize this script to run Peridigm simulations on a virtual Docker cluster with the following command:

./pdrun --np 4 fragmenting_cylinder.peridigm --path=`pwd`

Please consult the documentation of the script for more info about the options using ./pdrun -h. There are really only a few details in between what I've done here and being able to deploy this on a production HPC cluster. The main difference would be that you would use a resource manager, e.g. SLURM, to schedule running the Docker containers. You would also want to have both your mounted volume and a copy of the Docker image on a shared filesystem across all nodes of the cluster. Did I mention you can deploy your own private registry server?
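To make the manual virtual-cluster steps concrete, here is a minimal Python sketch of the two pieces that are easy to get wrong by hand: looking up each container's IP address and assembling the host list for mpiexec. It is not the pdrun script, and the function names container_ip and mpiexec_command are hypothetical; it assumes the containers were already started with docker run as described earlier.

```python
import subprocess


def container_ip(name):
    """Return a container's IP address via `docker inspect` (requires Docker)."""
    fmt = "{{ .NetworkSettings.IPAddress }}"
    result = subprocess.run(["docker", "inspect", "-f", fmt, name],
                            check=True, capture_output=True, text=True)
    return result.stdout.strip()


def mpiexec_command(head, ips, input_file, np_total=None):
    """Build the `docker exec ... mpiexec` argument list for a host list.

    `head` is the container that launches mpiexec; `ips` holds the
    addresses of all containers in the virtual cluster. By default one
    MPI task is requested per container.
    """
    if np_total is None:
        np_total = len(ips)
    return ["docker", "exec", head,
            "mpiexec", "-np", str(np_total), "-host", ",".join(ips),
            "Peridigm", input_file]


if __name__ == "__main__":
    # Example addresses from the text; on a real system one would use
    # [container_ip(n) for n in ("peridigm0", "peridigm1")] instead.
    ips = ["10.1.0.124", "10.1.0.125"]
    print(" ".join(mpiexec_command("peridigm0", ips,
                                   "fragmenting_cylinder.peridigm")))
```

Keeping the command construction separate from the Docker calls means the host list can be checked without any containers running.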

The last point I would like to make about Docker is that, while I demonstrated that end users can benefit by eliminating the entire build process and moving right to running the simulation code, developers could also benefit greatly from using a common development image and supporting only the Docker image, as it can be quickly deployed and tested on all platforms. How many times have you heard, even from experienced developers, after a commit fails in continuous integration, "It worked on my machine..."? Docker offers a solution to those problems and many others.

A LaTeX Beamer template/theme for the Cockrell School of Engineering

This post is mainly aimed at my colleagues (and students) in the Cockrell School of Engineering at The University of Texas at Austin. As you may have gathered from other posts on this blog, I am a huge fan of LaTeX for typesetting documents. Recently, I had to give a talk within the Department and was asked politely by our Communications Coordinator to use a provided MS PowerPoint template that conforms to the Visual Style Guide of the Cockrell School of Engineering.

I have many reasons for disliking PowerPoint that I will not go into here, but I have avoided using it at all costs for several years, and did not want to start now. I also have an appreciation for branding and respect the desire to project a uniform brand from the Departments/School. My solution was to create my own LaTeX Beamer presentation style that replicates the Cockrell School PowerPoint as closely as possible. I hacked it together relatively quickly for my talk, which I delivered last week, but decided over the weekend to put together something a little more robust to share with my colleagues in the Cockrell School. The style file accepts arguments to input different department names and has some nice features for using BibTeX citations within the presentation.

The repository can be cloned with git using the following command:

git clone git@github.com:johntfoster/cockrell-school-latex-beamer-template

It can also be found on GitHub. Feel free to fork it and extend it as you see fit. If you find any errors or add useful extensions, please send pull requests.

You can also download a zip archive of the files: cockrell-school-latex-beamer-template-master.zip

An example of the PDF output is shown below:

Prevent TeXShop from stealing focus from an external editor

I use Vim as my editor of choice for nearly all text editing activities, including writing LaTeX documents. My usual workflow is to use a split window setup with tmux and run latexmk -pvc on my LaTeX file in one split-pane while editing the source file in the other split-pane. If you're not familiar with latexmk, it is a Perl script that, in a smart way, using a minimal number of compile runs, keeps your LaTeX document output up-to-date with the correct cross-references, citations, etc. The -pvc option keeps it running in the background and recompiles when it detects a change in any of the project files. I then use TeXShop as a PDF viewer because it has the nice ability to detect changes to the PDF output and autoupdate. Mac OS X's built-in Preview will do this as well, but you must click the window to enable the refresh. However, by default, TeXShop will steal focus from the editor window. This is annoying, forcing me to click or Command-Tab back to the Terminal to continue typing. I found a fix in a comment on Stack Exchange. If you type

defaults write TeXShop BringPdfFrontOnAutomaticUpdate NO

in the Terminal window this will disable the focus-stealing behavior and leave the window in the background so that it doesn't disrupt continuous editing.

Using Exodus.py to extract data from FEA database

This post will briefly show how you can use the exodus.py script distributed with Trilinos to extract data directly from an Exodus database. Exodus is a wrapper API on NetCDF that is specifically suited for finite element data. That is, it defines variables on nodes, elements, blocks of elements, sets of nodes, etc. The Exodus API is provided in both C and Fortran; exodus.py uses ctypes to call into the compiled Exodus C library.

First, I need to rearrange my install environment a little because exodus.py expects the NetCDF and Exodus compiled dynamic libraries to be in the same directory. On my machine they are not, so I will just create some symbolic links. It also expects the Exodus include file to be in a folder labeled inc, but on my machine it is labeled include so again, I will just create some symbolic links.

%%bash

ln -sf /usr/local/netcdf/lib/libnetcdf.dylib /usr/local/trilinos/lib/.

ln -sf /usr/local/trilinos/include /usr/local/trilinos/inc

If your /usr/local is not writable, you may need to use sudo to create the links. Also, I am on Mac OS X, where dynamic libraries have a .dylib file extension. If you use Linux, you will need to change .dylib above to .so.

We also need to add the path of exodus.py to the active PYTHONPATH. We can do this from within the IPython session.

import sys

sys.path.append('/usr/local/trilinos/bin')

Now we can load the exodus class from exodus.py and instantiate a file object with a given filename. I will use the ViscoplasticNeedlemanFullyPrescribedTension_NoFlaw.h Exodus history database that is output from the Peridigm verification test of the same name.

from exodus import exodus

e = exodus('ViscoplasticNeedlemanFullyPrescribedTension_NoFlaw.h', mode='r', array_type='numpy')

Now we'll use the API calls to extract the data. First we'll get the time step values.

time_steps = e.get_times()

Now we can print the global variable names.

e.get_global_variable_names()

And use the global variable names to extract the data from the database. Since we used array_type='numpy' when we instantiated the file object above, the data is stored in numpy arrays.

vm_max = e.get_global_variable_values('Max_Von_Mises_Stress')

vm_min = e.get_global_variable_values('Min_Von_Mises_Stress')

Because in this example test we load at a constant strain-rate, we can easily convert the time-steps to engineering strain.

eng_strain_Y = time_steps * 0.001 / 1.0e-8

Now we can create a stress-strain curve

%matplotlib inline

import matplotlib.pyplot as plt

plt.plot(eng_strain_Y, vm_max, eng_strain_Y, vm_min);

plt.ylabel("Max/Min von Mises Stress");

I don't want the symbolic links I created earlier to cause any unexpected trouble for me later, so I will remove them.

%%bash

rm /usr/local/trilinos/lib/libnetcdf.dylib

rm /usr/local/trilinos/inc

Discrete error and convergence

I was asked a question today about a typical way to present the error between a numerical scheme and an exact solution, and the convergence of the method. I will demonstrate one method that is typically used, based on the $L_2$-norm of the error.

Consider the ODE

\begin{equation} \frac{\rm{d}x}{\rm{d}t} = x(t) \quad x(0) = 1 \end{equation}

which has the analytic solution

\begin{equation} x(t) = {\rm e}^t \end{equation}

I'm too lazy to code up anything much more sophisticated, so let's use an Euler explicit finite difference solution for $0 < t < 100$. We'll write a function that computes the $L_2$ norm of the error between the discrete solution and the exact solution. The formula for the norm is

$$ \Vert error \Vert_{L_2} = \sqrt{ \frac{1}{N} \sum_{i=1}^N(discrete(x_i) - exact(x_i))^2} $$

import numpy as np

def finite_diff_solution_err(N):
    """
    Compute explicit finite difference solution and L2 error norm

    input: N - number of time steps
    output: normed error
    """
    t = np.linspace(0., 100., num=N)
    x = np.zeros_like(t)
    n = np.arange(N)
    x[0] = 1.0
    x[1:] = (1.0 + (t[1:] - t[:-1])) ** (n[1:]) * x[0]
    exact = np.exp(t)
    err = np.sqrt(np.sum((x[:] - exact[:]) ** 2.0) / N)
    return err
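The closed-form expression assigned to x[1:] in the function follows from repeatedly applying the explicit Euler update. A short derivation, assuming a uniform step size $h = t_{n+1} - t_n$ (which is what np.linspace produces):

$$ x_{n+1} = x_n + h \left. \frac{{\rm d}x}{{\rm d}t} \right|_{t_n} = x_n + h\, x_n = (1 + h)\, x_n \quad \Longrightarrow \quad x_n = (1 + h)^n\, x_0 $$

so the whole discrete solution can be computed in one vectorized statement rather than in a time-stepping loop.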

Now let's solve our problem for increasing degrees-of-freedom

dofs = np.array([10, 100, 1000, 10000, 100000])

errs = [finite_diff_solution_err(i) for i in dofs]

Finally, we plot the results as a function of step size $h$. If we fit a straight line to the data, we get an estimate of the convergence rate of the method, so we'll do that as well.

%matplotlib inline

import matplotlib.pyplot as plt

#Fit a straight line

coefs = np.polyfit(np.log10(1.0 / dofs), np.log10(errs), 1)

y = 10 ** (coefs[0] * np.log10(1.0 / dofs) + coefs[1])

#Plot

plt.loglog(1.0 / dofs, y, 'b-')

plt.loglog(1.0 / dofs, errs, 'b^')

plt.xlabel(r"$\log_{10} h$")

plt.ylabel(r"$\log_{10} \Vert error \Vert_{L_2}$");

The first term below is the slope of the least-squares fit straight line, which shows the convergence rate.

coefs
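As a cross-check on the fitted slope, the observed order of convergence can also be estimated from pairs of successive errors, $p \approx \log(e_1 / e_2) / \log(h_1 / h_2)$. The sketch below is a minimal, self-contained illustration for the same ODE. The function name euler_l2_error and the shorter interval $0 \le t \le 1$ are assumptions made here (not the setup used above), chosen so that all step sizes are small enough for the first-order behavior expected of explicit Euler to be visible.

```python
import numpy as np


def euler_l2_error(n_steps, t_end=1.0):
    """Explicit Euler for dx/dt = x, x(0) = 1; returns (h, discrete L2 error)."""
    t = np.linspace(0.0, t_end, n_steps + 1)
    h = t[1] - t[0]
    # Closed form of the Euler iterates: x_n = (1 + h)^n * x_0 with x_0 = 1
    x = (1.0 + h) ** np.arange(n_steps + 1)
    err = np.sqrt(np.mean((x - np.exp(t)) ** 2))
    return h, err


# Halve the step size repeatedly and compare successive errors
steps = [10, 20, 40, 80, 160]
hs, errs = (np.array(v) for v in zip(*(euler_l2_error(n) for n in steps)))

# Observed order between each pair of refinements
rates = np.log(errs[:-1] / errs[1:]) / np.log(hs[:-1] / hs[1:])
print(rates)
```

Each entry of rates should approach 1 as $h$ decreases, consistent with a first-order method. Unlike a single least-squares slope, the pairwise rates also show whether the coarsest grids are still outside the asymptotic regime.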

Managing a professional website with Nikola, LaTeX, Github and Travis CI

As an academic, ensuring that others are aware of and can easily access recent research results, papers, and other professional communications is an important part of the "branding" we are tasked with as quasi-business managers in running our research programs. Most of us keep an up-to-date Curriculum Vitae (CV) for purposes of annual performance reviews, proposal submissions, etc. I maintain my own CV judiciously, updating it in near real-time after any new paper is published, talk is delivered, or award is given.

For several years, I have maintained my CV using the LaTeX typesetting system. I prefer the elegant typesetting of LaTeX, but mostly I prefer to keep my published papers stored in a BibTeX database and use the citations not only in my CV, but also in journal articles, and other forms of communication. Maintaining only one BibTeX database with all my papers reduces reproduction of work from paper-to-paper. I also learned at some point that I could use the htlatex utility distributed with TeXLive to convert my LaTeX CV into HTML for posting on the web.

I have recently designed a professional website to introduce myself, advertise my research, and include professional information and resources, one of them being my CV. After being a WordPress user for many years, for this website I switched to the great static blog/website generator Nikola. I love the ability to edit plain text files in Vim using Markdown, ReST, or even a mix of Markdown, LaTeX, and HTML through pandoc integration. The icing on the cake is the ability to create blog posts with the nascent IPython Notebook. Nikola also has built-in support for deploying to GitHub Pages; while it's not difficult to home-roll a deployment scheme, this additional feature is nice for beginners using GitHub Pages.

Because I update my CV much more frequently than I would need to update the website in general, and have for some time utilized git as a version control tool, I decided to push the CV repository to GitHub and see if I could devise a scheme to automate the workflow of not only keeping my CV up-to-date, but also regenerating my professional website automatically upon a git push to the CV repository. I found this to be pretty straightforward with the help of Travis CI. If you're not familiar with Travis, it is a continuous integration system, typically used in software testing. It integrates seamlessly with GitHub, such that upon any git push to GitHub, Travis will pull a current version of the repository and run a set of commands specified in a .travis.yml file. In summary, here is my workflow for keeping everything updated:

- Edit CV

- Commit changes to local repository and push to GitHub

- Travis builds CV in PDF and HTML versions

- Upon a successful build, Travis pushes new PDF and HTML versions to a branch on the CV repo

- Travis then triggers a rebuild of the professional website which is stored in its own repo

- Travis then rebuilds the professional website with Nikola and includes the new HTML CV as a page

I'll now walk through the key parts of each step of the workflow:

Edit CV

I am assuming you have a LaTeX CV to edit or a template you're working from. Feel free to use my own and modify it in any way you like to suit your own needs/preferences. It's a good idea to ensure the CV builds locally first; I prefer to use latexmk for this. The following .latexmkrc works for me both locally on Mac OS X as well as on the Ubuntu Linux machines that Travis CI utilizes.

The \\\def\\\ispdf{1} part is because my cv.tex file has a definition statement in it that modifies the output slightly (regarding fonts) depending on whether you are requesting a PDF output or the HTML output. The extra backslash characters are to escape correctly in the bash shell. A default run of latexmk with this .latexmkrc file should create a PDF version of the CV. While counter-intuitive, a run of latexmk -pdf will actually produce an HTML version of the CV, this is because the pdflatex command has actually been redefined to htlatex on the second line of the .latexmkrc file. Even if the desire is to build an HTML version, latexmk must be run first to produce the correct cross-references and .aux file.

Commit changes to local repository and push to GitHub

There are so many great git and GitHub resources out there that I'm going to assume you know or can find out about basic git usage. There is one important thing to note for the first commit to GitHub. You may run into problems if there is not an alternate branch of the repository for Travis to push the build results to. You can create a branch with the following command:

git checkout --orphan travis-build

This will create a new branch called travis-build with no history. You should then remove all the files in this branch, commit, and push to GitHub

git rm -rf *

git commit -m "First commit to travis-build branch"

git push origin travis-build

assuming the default origin name for your GitHub repository. Now switch back to your master branch and push to GitHub. The .travis.yml file in your repository (details covered below) will instruct Travis on how to build the repository

git checkout master

git commit -am "A commit message detailing changes"

git push origin master

Travis builds CV in PDF and HTML versions

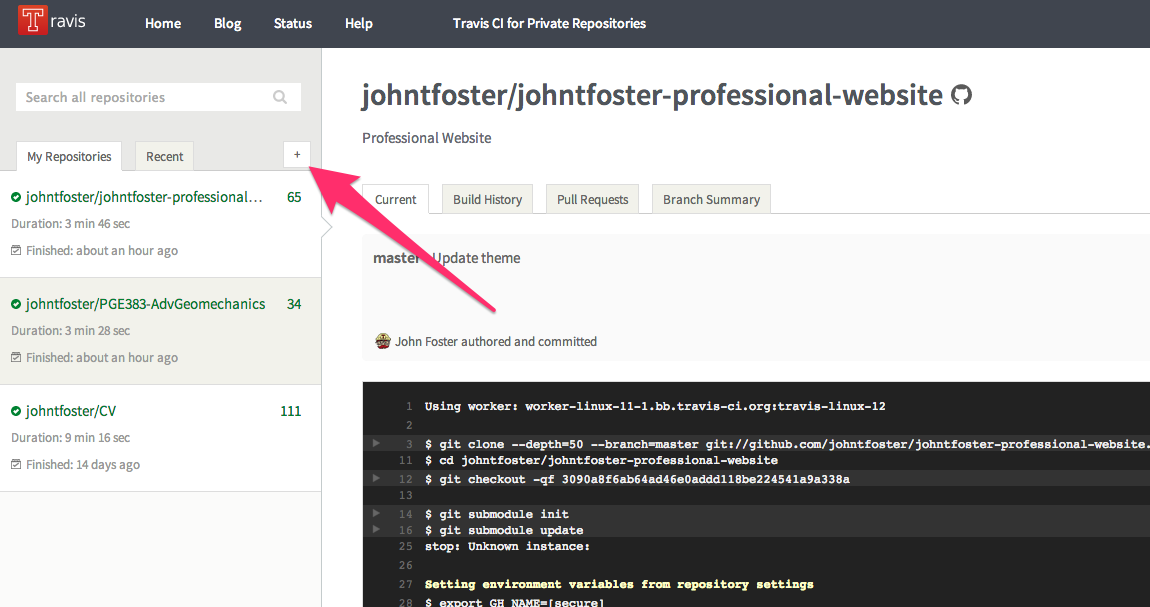

You will need to enable Travis CI for your repository. After signing into Travis via your GitHub username, you can add any public GitHub repository for free continuous integration services. You can add a repository by clicking on the + arrow in the left-hand panel of Travis as shown

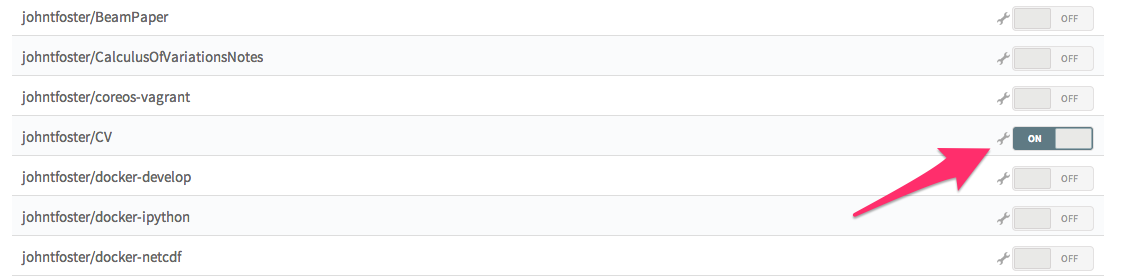

Then click the ON button to enable Travis.

Upon committing, Travis reads the .travis.yml file in the repository and performs a set of actions. In this case, we want to build a LaTeX file, and since there is no preinstalled Travis Ubuntu image for LaTeX, we need to use apt-get to install all of the LaTeX dependencies and proper fonts for building the CV. The other commands are just some basic Travis configuration; full details for Travis configuration can be found here.

Once all of the dependencies are installed, Travis will build both the PDF and HTML versions of the CV with

After a successful build, Travis will clone the previously created travis-build branch, copy the newly created PDF and HTML files into this branch, commit them, and push them back to GitHub. This requires setting up a few environment variables, which can be added on the Travis project page. The variables are GIT_EMAIL, GIT_NAME, GH_TOKEN, and TRAVIS_TOKEN. The first two just correspond to basic git configuration and are really just used to store the information of the person who makes the commit in the git history. Since it's actually Travis making the commit, I just define the variables as

GIT_NAME="Travis CI"

GIT_EMAIL=travis-ci@travis.org

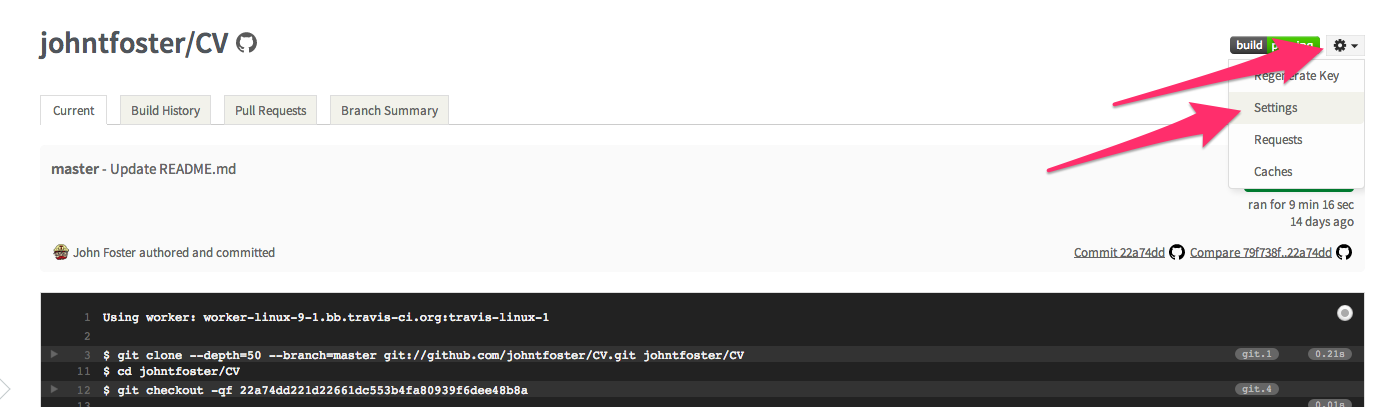

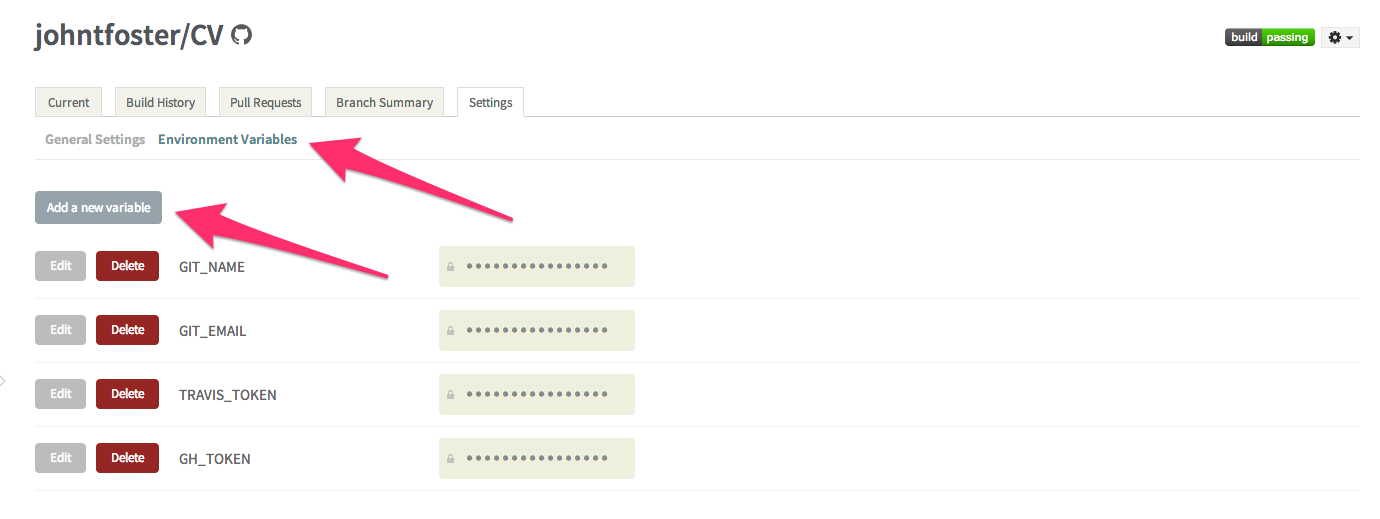

The environment variables can be set on the Travis settings page as shown.

Then click on the environment variables tab and select "Add a new variable".

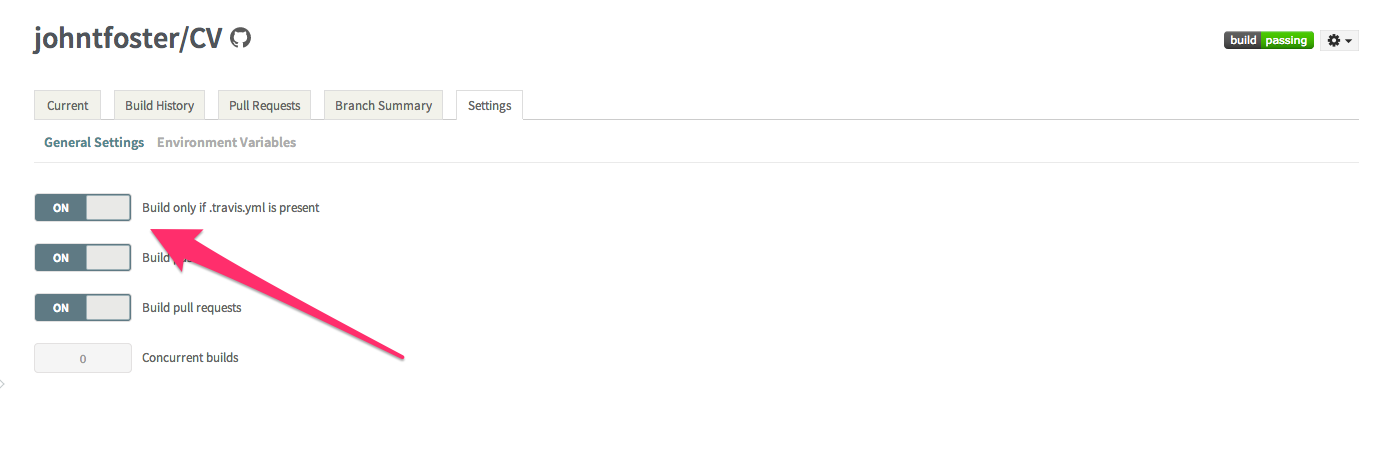

While on the settings page, it is also useful to select "Build only if .travis.yml is present". This will prevent Travis from automatically attempting to build on repository branches that do not have a .travis.yml file, as is the case with the travis-build branch we created earlier. These environment variables are stored encrypted on the Travis servers.

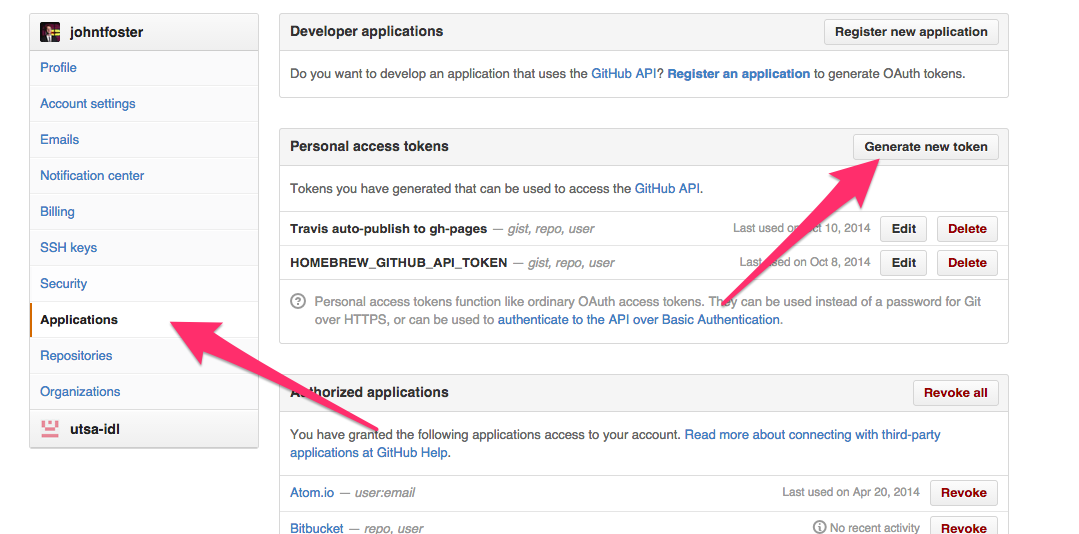

The other two environment variables, GH_TOKEN and TRAVIS_TOKEN, are more critical, as they give Travis the proper credentials to commit back to GitHub and to trigger a new build of the website in which the CV will be included. To get a GitHub token, go to your GitHub settings page, click on Applications in the left-hand panel and then "Generate new token", give the token a name, and copy or write down the token somewhere safe.

This will be the only time you can actually see the token, so make sure you have it copied somewhere. You can use the same token for multiple Travis jobs, but you have to know what it is, as it cannot be revealed again later. Use this token to set the GH_TOKEN environment variable back on the Travis site. Finally, you can get a TRAVIS_TOKEN through the Ruby Travis command line interface. If you have Ruby installed, you can install Travis like any Ruby gem with

gem install travis

Then you can find your Travis access token with

travis token

Copy the result and add the final environment variable on your Travis settings page just like the others before.

Upon a successful build, Travis pushes new PDF and HTML versions to a branch on the CV repo

Now with the environment variables set, the last part of the .travis.yml file can be executed to publish the built PDF and HTML files to the travis-build branch.

This issues a git commit with a message that includes the current Travis build number via the default ${TRAVIS_BUILD_NUMBER} environment variable. The ${GH_TOKEN} environment variable establishes the ability to push back to origin, i.e. GitHub without an explicit login.
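To illustrate how these two environment variables fit together, here is a minimal Python sketch. It is not the contents of the .travis.yml file: the function names and the commit message wording are hypothetical, and the URL form (a token placed before github.com in an HTTPS remote) is one common way of authenticating a push, assumed here for illustration.

```python
import os


def authenticated_remote(token, repo_slug):
    """Return an HTTPS remote URL that embeds a GitHub token.

    `repo_slug` has the form "owner/repository". The token is a secret,
    so the resulting URL should never be printed in build logs.
    """
    return f"https://{token}@github.com/{repo_slug}.git"


def commit_message(env=None):
    """Build a commit message that records the Travis build number."""
    env = os.environ if env is None else env
    build = env.get("TRAVIS_BUILD_NUMBER", "unknown")
    return f"Travis build {build} pushed to travis-build"


if __name__ == "__main__":
    # Outside of Travis the build number is not set, so "unknown" is used.
    print(commit_message())
```

On Travis, the message would be passed to git commit -m and the URL to git push; reading both values from the environment keeps the token out of the repository itself.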

Travis then triggers a rebuild of the professional website which is stored in its own repo

The trigger is what occurs in the last two lines of the .travis.yml file. First we have to install the Travis command line client on Travis via Ruby gem. Ruby is guaranteed to be installed because we specify language: ruby in the first line of .travis.yml. Then we execute the trigger. The entry following the -r option specifies the GitHub repository to trigger a Travis rebuild on, in this case johnfoster-pge-utexas/johnfoster-pge-utexas.github.io.

This completes the process of automatically having Travis build the CV and publishing back to GitHub. The complete .travis.yml file is now shown

Because of the need to install all of the large LaTeX dependencies into the Travis Ubuntu image before compiling, this whole process takes about 10 minutes on Travis, a little long to just compile a simple LaTeX file, but if one needs a speedy deployment the steps can always be completed manually. Hopefully, one day Travis will consider adding a LaTeX image to their pre-installed language images. This would likely cut down the compile time to only a minute or two at most.

Travis then rebuilds the professional website with Nikola and includes the new HTML CV as a page

As mentioned earlier, I recently moved to the static site generator Nikola. After many years using WordPress, I got tired of unnecessary database configurations (unnecessary because I mostly just had static pages) and the inability to edit posts in plain text. After a little searching, and having a preference for a static blog site that was extendable through my favorite programming language, Python, I narrowed it down to Pelican and Nikola. I experimented with each of them and decided on Nikola because I felt like the codebase was a little easier to understand in case I would want to extend it in some way, and it did not have the Sphinx dependence that Pelican has. There are several good tutorials on Nikola use, so I will not address this here; however, one nice feature of Nikola is that it has the ability to use the nascent pandoc as a document compiler. Pandoc's Markdown is a superset of standard Markdown and is quite a bit more flexible, allowing you to mix standard Markdown, LaTeX math, and raw HTML markup. Nikola doesn't require pandoc, but I would like the ability to use it, so we first need to install it as a dependency. Pandoc is written in Haskell, so it would require several steps and a lengthy install. Thankfully, the guys at RStudio have a set of compiled binaries that will work when installed into Travis; these are what the first few lines of .travis.yml in the johnfoster-pge-utexas.github.io repository are doing.

Nikola can be installed via pip in Python. Because I also want to keep the option open to blog in IPython, there are also IPython and all the scientific Python stack dependencies that need to be installed. To install all of these dependencies we use a requirements.txt file.

Then we can build the website

In order to get the PDF and HTML versions of the CV that were built and posted earlier to show as a page in this website, I use a reStructuredText document, which Nikola can compile, with the raw HTML directive

.. raw:: html
   :url: https://raw.githubusercontent.com/johntfoster/CV/travis-build/cv.html

which produces this page when compiled by Nikola.

The last part of the .travis.yml is very similar to what was described previously for the CV, only now we push to a branch gh-pages because we actually want GitHub to serve the website. Details on using GitHub pages can be found here. The entire .travis.yml file is shown below.

After this file is run successfully by Travis, the website will be served on GitHub. My site can be seen here. The entire process from pushing a change on the CV, to the professional website being completely rebuilt and updated usually takes around 10-15 minutes. Again, if there were ever a need to have an instant update, there is always the option to push the changes by hand. Please feel free to use any or all of the tips/code presented in your own workflow.